Cloud cost optimization has become a top priority for organizations in 2026. As they continue to scale workloads across AWS, Azure, and Google Cloud, the cloud bill often grows faster than the business itself.

It is important to note that cloud cost optimization is not just about saving money. It’s about spending wisely, improving efficiency, and ensuring that you get business value from every dollar you spend in the cloud.

This article gathers the best practices for cloud cost optimization. Beyond a few cost-saving hacks, it gives you a practical, cloud-agnostic framework that you can apply to your own environment.

Cloud cost optimization in 2026 is no longer optional; it has become a discipline every cloud team must master. And this guide is your roadmap to do it right.

Why Cloud Cost Optimization Matters in 2026

In 2026, organizations are running more dynamic environments than before, such as microservices, Kubernetes clusters, AI workloads, and serverless architectures. These technologies bring agility, but they can also make cost visibility extremely difficult.

Cloud bills are not only large, they’re confusing. With thousands of line items, variable pricing, and different billing models across providers, even experienced engineers struggle to identify where costs are leaking. This is exactly why cloud cost optimization is gaining focus.

Global cloud spend is growing by roughly 20–21% year over year, and 20–40% of cloud resources remain underutilized or idle. These unused or over-provisioned resources are often called zombie resources, and they drain budgets every month. Left unchecked, they result in waste, poor ROI, and an increased carbon footprint.

Cloud cost optimization helps companies address these inefficiencies through visibility, right-sizing, automation, and continuous governance.

The goal is simple: achieve performance and reliability without overspending.

Challenges in Cloud Cost Optimization

Some of the most common challenges are:

- Lack of visibility: Teams do not know who is spending what or where costs are coming from.

- Idle or zombie resources: Unused volumes, forgotten load balancers, or unattached IPs continue to add charges.

- Over-provisioning: Teams allocate more CPU or memory than needed, sizing for anticipated traffic instead of actual usage, which wastes money.

- Decentralized accountability: Costs are not managed from one place; everyone handles their own part, and no one owns or monitors the total spend.

- Complex pricing models: Each cloud (AWS, Azure, GCP) has different pricing rules, making it hard to compare costs or pick the cheapest option.

The result? Cloud costs become unpredictable, budgets are exceeded, and optimization remains reactive instead of proactive.

What is Cloud Cost Optimization?

Cloud cost optimization is not about cutting costs blindly; it’s about spending smarter. The goal is to find the right balance between cost, performance, and outcomes. You can also say it’s not about running the cheapest infrastructure but about running the most efficient one for your workload.

So when we talk about cloud cost optimization, we mean designing, deploying, and running workloads in a way that maximizes resource utilization, avoids waste from idle or over-provisioned resources, uses automation and scaling policies wisely, and keeps performance and reliability in balance with cost.

Cloud Cost Optimization vs. Cloud Cost Management

Many people use these terms interchangeably, but they’re not the same thing.

Cloud cost management is about tracking and reporting your costs. It’s the visibility layer: understanding where money is going, how much different services cost, and who is spending it.

Cloud cost optimization is about acting on that data. It means taking steps to reduce waste, right-size infrastructure, and plan resources better. Cost management tells you the “what,” while cost optimization tells you the “how.”

In practice, these two work together. Without strong cost management, you can’t optimize effectively. Without optimization, your cost management reports only document waste you never act on.

Core Principles of Cloud Cost Optimization

Cloud cost optimization requires a clear foundation built on five key principles: visibility, right-sizing, elasticity, automation, and governance. These are not just best practices; they are the daily habits of teams that run efficient, predictable, and well-optimized cloud environments.

Let’s break each one down for you:

1. Visibility & Transparency

Without visibility, you can't optimize. Visibility is the first and most important step in cloud cost optimization. Every cloud provider has cost analysis tools, but knowing how to use them is what differentiates an efficient team from a wasteful one.

Visibility means having a clear, real-time picture of what services are driving costs, which teams or projects own those costs, and how usage trends change over time.

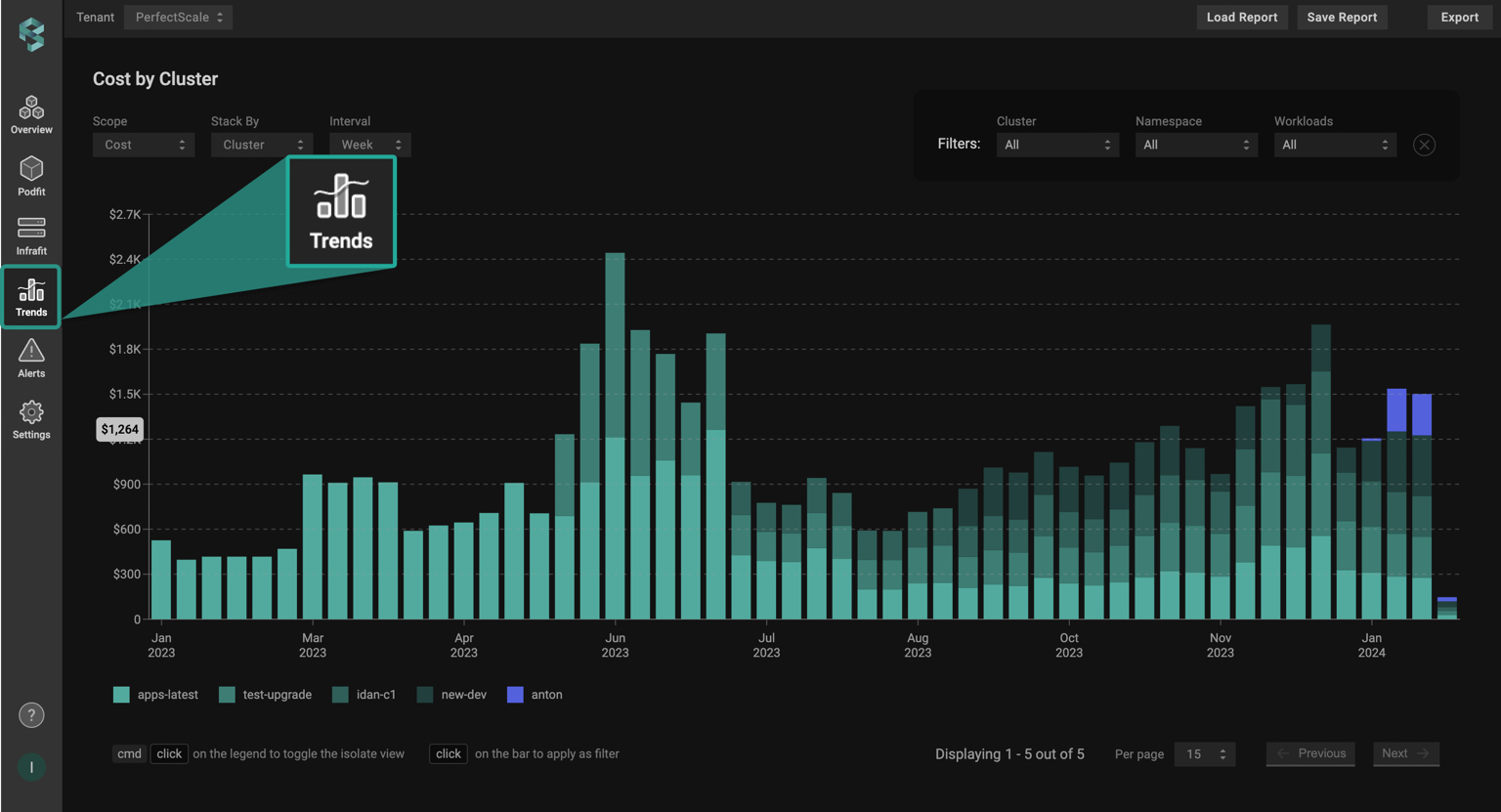

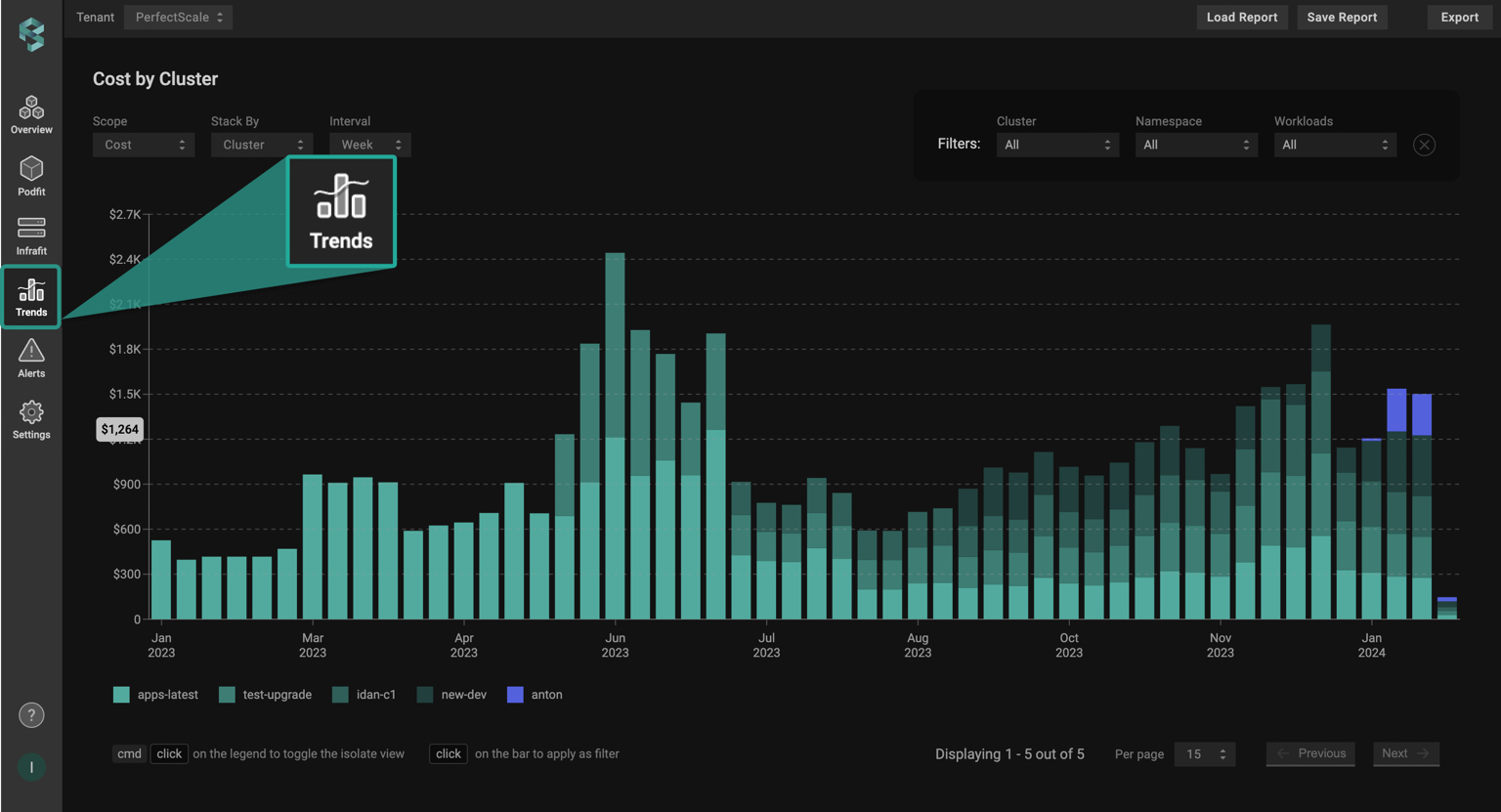

>> For multi-cloud and Kubernetes setups, PerfectScale Trends helps you visualize resource usage and spending patterns in real time, making it easy to spot inefficiencies and take quick action before waste grows.

2. Right-Sizing

Right-sizing is the foundation of efficient cloud cost optimization. It’s about running your workloads on compute, memory, and storage resources that are neither too large nor too small.

As noted earlier, 20–40% of cloud resources are typically underutilized or idle, often because teams choose instance types or sizes based on guesswork instead of actual usage. Over time, these oversized VMs, containers, or databases quietly consume money without adding value.

But right-sizing isn’t just about compute resources. It also means adjusting storage tiers, tuning database capacity, and adjusting container limits.

The key mindset: provision for average load, scale for peak load.

3. Elasticity

Elasticity is one of the cloud’s greatest advantages. When you make full use of auto-scaling and on-demand provisioning, you get the most out of cloud cost optimization.

Elastic workloads scale up automatically when demand is high and scale back down to minimal capacity once demand drops. This helps ensure that you are not paying for capacity that sits idle.

For containerized environments, elasticity extends to tools like Karpenter, Cluster Autoscaler, and KEDA, which optimize pod and node scaling in real-time.

Elasticity is what keeps costs aligned with actual business activity: you pay for what you use, not what you expect to use.
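To make the pay-for-what-you-use idea concrete, here is a minimal sketch that compares a fleet sized for the peak hour with one that follows demand hour by hour. The demand curve, the capacity per instance, and the price are made-up illustrative numbers, not real provider figures.

```python
import math

# Hypothetical inputs: requests/sec for each hour of one day, capacity, and price.
HOURLY_DEMAND_RPS = [200] * 8 + [900] * 10 + [400] * 6   # 24 hourly samples
RPS_PER_INSTANCE = 100           # assumed capacity of one instance
PRICE_PER_INSTANCE_HOUR = 0.10   # assumed on-demand price in USD


def instances_needed(rps: float) -> int:
    """Smallest instance count that can serve the given load (at least one)."""
    return max(1, math.ceil(rps / RPS_PER_INSTANCE))


def fixed_cost(demand: list) -> float:
    """Daily cost when the fleet is sized for the peak hour all day."""
    peak = max(instances_needed(rps) for rps in demand)
    return peak * len(demand) * PRICE_PER_INSTANCE_HOUR


def elastic_cost(demand: list) -> float:
    """Daily cost when the fleet is resized every hour to match demand."""
    return sum(instances_needed(rps) for rps in demand) * PRICE_PER_INSTANCE_HOUR


fixed = fixed_cost(HOURLY_DEMAND_RPS)
elastic = elastic_cost(HOURLY_DEMAND_RPS)
print(f"fixed: ${fixed:.2f}/day, elastic: ${elastic:.2f}/day")
```

With these assumed numbers the peak-sized fleet costs about $21.60 per day and the elastic fleet about $13.00. The gap widens as the difference between peak and off-peak load grows.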

4. Automation

Managing costs manually never scales. That’s why automation is a core pillar of modern cloud cost optimization.

The environments in the cloud change daily; new resources spin up, workloads move, or usage patterns change. Without automation, it’s impossible to maintain efficiency.

Automation can shut down idle resources after working hours, apply right-sizing recommendations, enforce budgets and send alerts when spending crosses thresholds, and regularly clean up unused volumes, IPs, or snapshots.

Automation removes human error and optimizes consistently, without needing to constantly manage it manually.
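As one illustration of off-hours shutdown, the sketch below decides whether a resource should be running at a given time. The working hours, the environment names, and the rule that production is never stopped are assumptions for this example. A real scheduler would also call the provider's API to stop and start resources.

```python
from datetime import datetime

WORK_START_HOUR = 8    # assumed local start of the working day
WORK_END_HOUR = 20     # assumed local end of the working day


def should_be_running(now: datetime, environment: str) -> bool:
    """Production always runs; other environments run on weekdays in work hours."""
    if environment == "production":
        return True
    is_weekday = now.weekday() < 5          # Monday=0 ... Sunday=6
    in_hours = WORK_START_HOUR <= now.hour < WORK_END_HOUR
    return is_weekday and in_hours


def weekly_running_share() -> float:
    """Fraction of the 168 hours in a week a non-production resource stays on."""
    return (WORK_END_HOUR - WORK_START_HOUR) * 5 / 168


print(f"non-production uptime: {weekly_running_share():.0%} of the week")
```

Under these assumptions a development environment runs for 60 of the 168 hours in a week, roughly 36%, so about two thirds of its always-on compute hours disappear.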

It’s important to note that automation can also feel risky. Many organizations avoid automating cost optimization in their production environments because they fear it will cause disruption or performance degradation. That’s why responsible automation should always focus on reliability first and on cost optimization second.

>> PerfectScale continuously enhances reliability and performance, optimizes resource utilization, minimizes waste, and streamlines the management of your Kubernetes infrastructure with its automation.

5. Governance & Policies

Without governance, cloud cost optimization doesn’t last. Governance is about putting guardrails in place so that every new deployment follows cost-aware rules.

Good governance doesn’t block engineers; it guides them. It ensures cloud usage stays efficient without slowing innovation.

The end result is accountability; each team has a clear perspective of what they own and how much it costs. This is how you limit cloud waste and empower teams to optimize costs as a continuous practice in the cloud, instead of a one-time cleanup.

When you combine visibility, right-sizing, elasticity, automation, and governance, you get the complete picture of cloud cost optimization.

Best Practices for Cloud Cost Optimization

The best practices for Cloud Cost Optimization are:

1. Visibility & Accountability

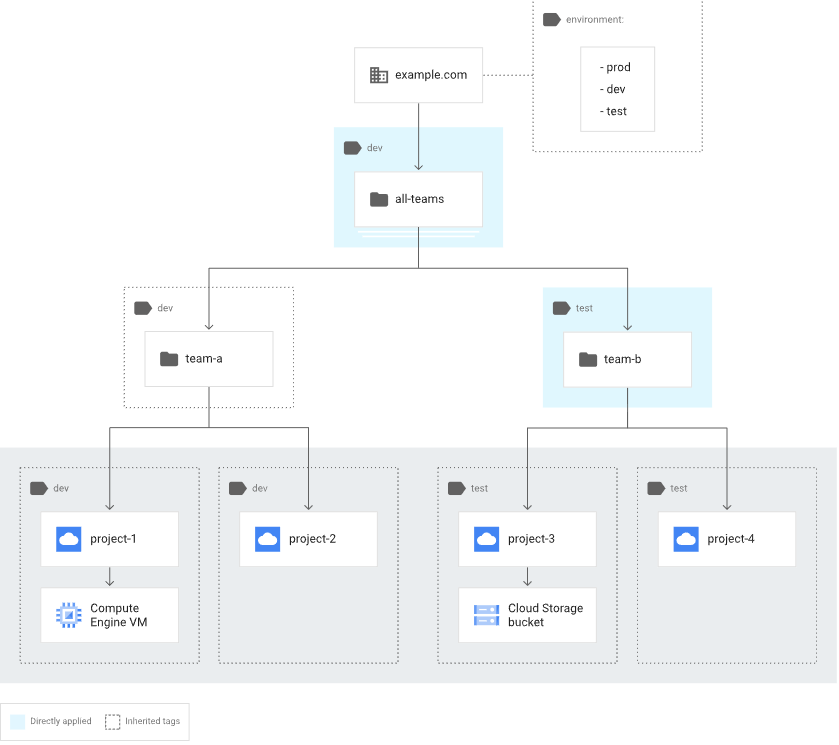

The easiest way to create cost visibility is consistent tagging and labeling. Tags (in AWS and Azure) or labels (in GCP) let you allocate spend by team, application, environment, or project.

When every resource, from a VM to a load balancer, carries the proper tags, you can easily group and analyze spending patterns. This is how teams discover hidden costs and trace them back to the right owner.

To maintain consistency, implement automated policies such as AWS Tag Policies, Azure Policy for Resource Tagging, or GCP Organization Policies. Automation here saves hours of manual cleanup and prevents tag drift.

Once tagging is in place, native cost management tools such as AWS Cost Explorer, Azure Cost Management, and Google Cloud Billing Reports become far more useful, because you can filter and group spend by those tags.

When teams have access to the same cost data, they can make better decisions.
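The grouping that tags make possible can be sketched in a few lines. The billing rows, resource IDs, and tag keys below are hypothetical. In practice the data would come from a provider's billing export.

```python
from collections import defaultdict

# Hypothetical billing export rows: (resource_id, monthly_cost_usd, tags)
BILLING_ROWS = [
    ("vm-1", 120.0, {"team": "payments", "env": "prod"}),
    ("vm-2", 80.0, {"team": "search", "env": "prod"}),
    ("lb-1", 25.0, {"team": "payments", "env": "dev"}),
    ("disk-9", 40.0, {}),  # untagged: nobody owns this cost yet
]


def cost_by_tag(rows, tag_key: str) -> dict:
    """Sum cost per tag value; untagged spend is grouped under 'untagged'."""
    totals = defaultdict(float)
    for _resource_id, cost, tags in rows:
        totals[tags.get(tag_key, "untagged")] += cost
    return dict(totals)


print(cost_by_tag(BILLING_ROWS, "team"))
```

Keeping an explicit "untagged" bucket is a deliberate choice here: it turns missing tags into a visible number that someone has to explain.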

PerfectScale complements this vision by helping Kubernetes teams gain clear visibility into cluster costs and real-time utilization.

2. Rightsizing Compute Resources

Right-sizing starts with data. The first step is to identify your underutilized compute resources: instances that consistently show low CPU, memory, or network usage. Each major cloud provider has a built-in recommendation service to help with this:

- AWS Compute Optimizer evaluates utilization metrics and recommends smaller or more efficient instance types.

- Azure Advisor evaluates VM performance and gives recommendations for resizing or shutting down idle VMs.

- Google Cloud Recommender identifies over-provisioned VMs and suggests more suitable configurations.
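The kind of check behind such recommendations can be approximated with a simple threshold rule. This is a rough sketch, not how any of the tools above actually work. The thresholds, instance names, and utilization samples are all assumptions.

```python
from statistics import mean

CPU_THRESHOLD_PCT = 20.0   # assumed cutoff for "low" average CPU
MEM_THRESHOLD_PCT = 30.0   # assumed cutoff for "low" average memory


def is_underutilized(cpu_samples: list, mem_samples: list) -> bool:
    """True when both average CPU and average memory stay below the cutoffs."""
    return mean(cpu_samples) < CPU_THRESHOLD_PCT and mean(mem_samples) < MEM_THRESHOLD_PCT


# Hypothetical utilization samples (percent) per instance: (cpu, memory)
fleet = {
    "api-1": ([55, 60, 72], [65, 70, 68]),
    "batch-7": ([4, 6, 5], [12, 15, 11]),
    "cache-2": ([8, 9, 7], [80, 82, 85]),   # low CPU but memory-bound: keep as is
}
candidates = [name for name, (cpu, mem) in fleet.items() if is_underutilized(cpu, mem)]
print(candidates)
```

Requiring both metrics to be low avoids flagging memory-bound workloads such as caches, which look idle if you only watch CPU.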

Once you identify underutilized workloads, the next step is to choose the right SKU or instance family. Instead of sticking to general-purpose types, move to workload-specific options such as:

- Compute-optimized instances for CPU-heavy workloads.

- Memory-optimized instances for large databases or in-memory caching.

- Storage-optimized instances for high I/O applications.

Another important consideration is modern architecture choices. If your workloads experience bursty or unpredictable traffic, containers and serverless computing can make a huge difference. Kubernetes, AWS Fargate, Azure Container Apps, and Google Cloud Run all can scale automatically based on demand, and you will pay only for what you actually use.

3. Optimize Storage Costs

The goal isn’t to store less; it’s to store smarter. Almost all public cloud providers offer multiple storage classes or tiers that are designed for different levels of access. The right selection will reduce costs without sacrificing performance or data availability.

Begin with determining how often your data is accessed. This is the foundation of storage tiering:

- Hot storage: Data that is frequently accessed (for example, user files or active app data).

- Warm storage: Data that is occasionally accessed (for example, monthly reports or logs).

- Cold or archive storage: Data that is rarely accessed (for example, backups or compliance records).

Each of the clouds has its own tiers:

- AWS: S3 Standard -> S3 Standard-Infrequent Access -> S3 Glacier Flexible Retrieval -> S3 Glacier Deep Archive

- Azure: Hot -> Cool -> Cold -> Archive tiers in Blob Storage

- GCP: Standard -> Nearline -> Coldline -> Archive

By moving inactive data to lower-cost tiers, you can cut storage costs by as much as 70%. AWS, Azure, and GCP all support automatic transitions through lifecycle management policies, so you don’t have to move files manually.
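A lifecycle rule boils down to mapping the age of the data to a tier. The sketch below shows that mapping and the resulting monthly cost. The tier names, age cutoffs, and per-GB prices are assumptions chosen for illustration. Real prices vary by provider and region.

```python
# Assumed cutoffs and prices; ordered from coldest to hottest tier.
TIERS = [  # (tier name, minimum days since last access, USD per GB-month)
    ("archive", 180, 0.002),
    ("cold", 90, 0.004),
    ("warm", 30, 0.010),
    ("hot", 0, 0.020),
]


def pick_tier(days_since_access: int) -> tuple:
    """Return (name, price) of the coldest tier whose age cutoff has been reached."""
    for name, min_days, price in TIERS:
        if days_since_access >= min_days:
            return name, price
    raise ValueError("days_since_access must be non-negative")


def monthly_cost(objects: list) -> float:
    """objects holds (size_gb, days_since_access); returns cost after tiering."""
    return sum(size_gb * pick_tier(days)[1] for size_gb, days in objects)


print(pick_tier(45), monthly_cost([(100, 0), (100, 200)]))
```

This sketch ignores retrieval fees and minimum storage durations, which colder tiers usually carry, so data that is read back often can end up costing more in a cold tier than in a hot one.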

Another common source of waste is orphaned storage: old snapshots, unattached volumes, or temporary disks that remain after instances are deleted.

A regular cleanup process helps reduce this cost. It’s a small habit that brings huge savings over time.
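A cleanup job typically starts with a report of what looks orphaned. Here is a minimal sketch of that filter. The inventory format, the field names, and the 14-day grace period are hypothetical. A real job would read the inventory from the provider's API and usually snapshot a volume before deleting it.

```python
from datetime import date, timedelta

MAX_UNATTACHED_DAYS = 14   # assumed grace period before a volume is flagged


def orphaned_volumes(volumes: list, today: date) -> list:
    """IDs of volumes that are unattached and were detached before the cutoff."""
    cutoff = today - timedelta(days=MAX_UNATTACHED_DAYS)
    return [
        v["id"] for v in volumes
        if v["attached_to"] is None and v["detached_on"] <= cutoff
    ]


# Hypothetical inventory rows.
inventory = [
    {"id": "vol-a", "attached_to": "vm-1", "detached_on": None},
    {"id": "vol-b", "attached_to": None, "detached_on": date(2026, 1, 2)},
    {"id": "vol-c", "attached_to": None, "detached_on": date(2026, 2, 25)},
]
print(orphaned_volumes(inventory, today=date(2026, 3, 1)))
```

The grace period keeps the report from flagging volumes that were detached only briefly, for example during a migration.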

4. Optimize Networking Costs

Networking costs are often overlooked in cloud cost optimization, yet they can quietly eat away at your budget.

Whenever data leaves a region or crosses availability zones, cloud providers may charge data transfer (egress) fees. These costs are small per GB, but at scale they can become one of the biggest surprises on your bill. The main focus should be to monitor, minimize, and localize your data transfers wherever possible.

Your first step should be to analyze how your applications move data. For example, if a workload in one region repeatedly fetches data from another, you’re paying for cross-region egress. Likewise, traffic between availability zones inside the same region can incur extra charges, depending on the provider.
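Once you know which paths your data takes, a rough estimate of the transfer bill is simple arithmetic. The path categories and per-GB rates in this sketch are assumptions, not any provider's actual prices.

```python
# Assumed per-GB transfer rates in USD; check your provider's current price list.
RATES_PER_GB = {
    "same_zone": 0.00,
    "cross_zone": 0.01,
    "cross_region": 0.02,
    "internet": 0.09,
}


def transfer_cost(flows: list) -> dict:
    """flows holds (path_type, gb_per_month); returns monthly cost per path type."""
    costs = {}
    for path_type, gb in flows:
        costs[path_type] = costs.get(path_type, 0.0) + gb * RATES_PER_GB[path_type]
    return costs


# Hypothetical monthly traffic between services.
flows = [("cross_zone", 1000), ("cross_region", 500), ("cross_zone", 1000)]
print(transfer_cost(flows))
```

Breaking the estimate down by path type shows where localizing traffic would pay off first.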

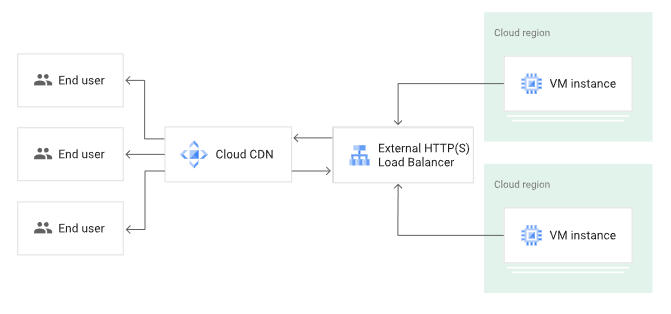

Another effective way to reduce networking costs is to minimize outbound data transfers by using content delivery networks (CDNs) and caching layers. Instead of fetching data from your origin servers each time, cached content is delivered from the nearest edge location.

AWS CloudFront, Azure CDN, and Google Cloud CDN all reduce the egress charges by keeping frequently accessed data closer to users.

For APIs and web services, reverse proxies or application-level caching (like Redis or Cloudflare Workers Cache) can further reduce repeated data transfers.

If your architecture includes Kubernetes or microservices, consider internal caching as well. Proxies such as Nginx or Envoy, or managed caches (AWS ElastiCache, Azure Cache for Redis, GCP Memorystore), can reduce unnecessary data transfers and improve application performance.

Running workloads across multiple regions may make sense for availability and reliability, but it comes with a cost.

Keep your database in the same region as your compute to avoid cross-region transfer and replication costs. Use multi-zone architecture for redundancy instead of multi-region unless you truly need it, and check whether your workloads really need global replication or whether regional scaling is sufficient.

Network optimization should be part of your ongoing cloud cost optimization. When you are continuously monitoring network traffic and designing with data locality in mind, you will build a cloud that’s not only faster and more reliable but also much more efficient in cost.

5. Commitment-Based Discounts

Another powerful strategy in cloud cost optimization is committing to long-term usage. All the major cloud providers reward predictable consumption with significant discounts, but the key is balancing commitment with flexibility.

AWS, Azure, and Google Cloud all have their own versions of the commitment-based pricing model:

- AWS has Savings Plans and Reserved Instances (RIs), which can save up to 72% over the on-demand prices.

- Azure provides Reserved VM Instances and Savings Plans, which work similarly by offering reduced prices for 1- or 3-year commitments.

- GCP has Committed Use Discounts (CUDs), which provide up to 55% off in exchange for predictable compute usage.

Each of these models encourages you to commit to a certain amount of usage over time. However, you must be careful about overcommitting because it can put you into a position where you are "locked" into a capacity you don’t fully use, sometimes wasting money instead of saving it.

To find the right balance, start by analyzing your baseline workloads: those that run continuously and have stable demand. Commit those to long-term plans while keeping bursty or experimental workloads on-demand. This strategy keeps your environment cost-efficient and flexible.

The golden rule: commit only where it makes sense. Flexibility is still crucial in cloud cost optimization, especially when workloads are dynamic.
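The overcommitment risk can be made concrete with a break-even calculation. This sketch assumes a simplified model: a commitment is billed in full at the discounted rate whether or not it is used, and any usage above it is billed on-demand. The rate and discount values are illustrative.

```python
def break_even_utilization(discount: float) -> float:
    """Share of committed capacity you must actually use for the commitment to pay off."""
    return 1.0 - discount


def commitment_savings(committed_hours: float, used_hours: float,
                       on_demand_rate: float, discount: float) -> float:
    """Positive: the commitment beats pure on-demand. Negative: it costs more."""
    covered = min(used_hours, committed_hours)
    overflow = used_hours - covered                 # billed on-demand either way
    on_demand_cost = used_hours * on_demand_rate
    committed_cost = committed_hours * on_demand_rate * (1 - discount)
    return on_demand_cost - (committed_cost + overflow * on_demand_rate)


# Assumed example: 1,000 committed hours at $1.00/hour with a 40% discount.
for used in (500, 600, 1000):
    print(used, commitment_savings(1000, used, on_demand_rate=1.0, discount=0.4))
```

In this model a 40% discount only pays off if you use more than 60% of what you committed to. At 50% utilization the commitment costs more than staying on-demand, which is why committing only the stable baseline is the safer choice.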

6. Use Spot and Preemptible Instances

If you’re looking for major savings in cloud cost optimization, spot and preemptible instances are hard to beat. They let you use spare compute capacity at discounts of 70–90% compared to on-demand pricing, which makes them a strong fit for workloads that can tolerate interruptions.

AWS, Azure, and GCP each offer their own versions: AWS Spot Instances, Azure Spot Virtual Machines, and Google Cloud Spot VMs (the successor to Preemptible VMs).

These are ideal for workloads like batch jobs, CI/CD pipelines, data processing, AI training, and stateless services. Essentially, any job that can be resumed or restarted without major issues is a good fit.

To get the most from spot and preemptible instances, design your workloads with interruption tolerance in mind. Since these instances can be reclaimed by the provider at any time, automation and resilience are key.
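One common way to build interruption tolerance is to checkpoint progress so that a replacement instance resumes where the reclaimed one stopped. The sketch below simulates this with a local JSON file. The file format, the `interrupt_after` parameter, and the uppercase "work" are stand-ins invented for this example. A real job would write checkpoints to durable shared storage.

```python
import json
import os
import tempfile
from typing import Optional


def process_items(items: list, checkpoint_path: str,
                  interrupt_after: Optional[int] = None) -> list:
    """Process items in order, persisting progress so a restart skips finished work."""
    done = 0
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            done = json.load(f)["done"]
    processed = []
    for index in range(done, len(items)):
        if interrupt_after is not None and len(processed) >= interrupt_after:
            return processed                      # simulated spot reclamation
        processed.append(items[index].upper())    # stand-in for real work
        with open(checkpoint_path, "w") as f:
            json.dump({"done": index + 1}, f)     # record progress after each item
    return processed


path = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
first_run = process_items(["a", "b", "c", "d"], path, interrupt_after=2)
second_run = process_items(["a", "b", "c", "d"], path)
print(first_run, second_run)
```

The first run is interrupted after two items, and the second run picks up the remaining two without redoing the finished work.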

Tip: In Kubernetes environments, Karpenter is an excellent choice. It dynamically provisions and replaces nodes using the most cost-efficient instance types available, including spot capacity. This allows Kubernetes clusters to maintain performance while minimizing spend.

7. Automate Scaling

Automation is the backbone of cloud cost optimization. Without it, teams either over-provision to stay safe or react too slowly when demand changes. Auto scaling adjusts your compute resources automatically based on real-time workload needs, so performance stays steady and costs stay controlled.

Every major cloud provider has built-in scaling features:

- AWS Auto Scaling Groups let you automatically add or remove EC2 instances based on load or metrics.

- Azure VM Scale Sets manage groups of VMs that dynamically scale based on performance thresholds.

- Google Cloud Managed Instance Groups (MIGs) provide similar flexibility, allowing you to define scaling policies for more predictable cost and performance.

With these, your infrastructure grows during peak traffic and shrinks during inactive time. You only pay for what you use, a primary principle of successful cloud cost optimization.
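At the core of many autoscalers is a proportional rule: scale the replica count by the ratio of the observed metric to its target. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler. The min and max bounds are assumed defaults for this example. Real autoscalers add tolerances, cooldowns, and stabilization windows on top.

```python
import math


def desired_replicas(current: int, metric: float, target: float,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Proportional rule: scale so the per-replica metric moves toward the target."""
    wanted = math.ceil(current * metric / target)
    return max(min_replicas, min(max_replicas, wanted))


# Example: 4 replicas averaging 90% CPU against a 60% target.
print(desired_replicas(current=4, metric=90, target=60))
```

The bounds matter for cost as much as for safety: the maximum caps how much a traffic spike or a runaway metric can add to the bill.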

Event-driven scaling takes traditional auto scaling a step further. Using tools like KEDA (Kubernetes Event-driven Autoscaling) or serverless functions (AWS Lambda, Azure Functions, and Google Cloud Functions), your workloads can scale quickly in response to specific triggers such as message queues, API calls, or scheduled jobs.

8. Optimize Kubernetes & Containers

In Kubernetes, cloud cost optimization revolves around the balance of performance, reliability, and efficiency.

The best starting point is right-sizing your container requests and limits. Many teams give their pods more CPU and memory than needed, which leads to over-provisioned nodes and wasted spend. Review metrics regularly and adjust requests to reflect real usage. Tools like PerfectScale make this easier by showing where containers are over- or under-provisioned.
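One simple way to turn usage metrics into a request value is to take a high percentile of observed usage and add headroom. This is an illustrative heuristic, not how PerfectScale or any other tool computes its recommendations. The 95th percentile, the 15% headroom, and the sample data are assumptions.

```python
import math


def percentile(samples: list, pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of values at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_request(usage_millicores: list, headroom: float = 0.15) -> int:
    """Suggest a CPU request: 95th percentile of observed usage plus headroom."""
    return math.ceil(percentile(usage_millicores, 95) * (1 + headroom))


# Hypothetical CPU usage samples in millicores for one container.
usage = list(range(10, 210, 10))      # 10, 20, ..., 200
print(recommend_request(usage))
```

Using a percentile instead of the maximum keeps a single spike from inflating the request, while the headroom leaves a margin for normal variation.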

The next step is to consider node auto-provisioning and bin-packing strategies. Bin-packing places pods onto as few nodes as possible, which raises node utilization and leaves fewer idle nodes to pay for.
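Bin-packing can be illustrated with the classic first-fit decreasing heuristic. This sketch considers CPU requests only, and the pod sizes and node capacity are hypothetical. Real schedulers and node provisioners also weigh memory, affinity rules, and disruption budgets.

```python
def first_fit_decreasing(pod_cpu_requests: list, node_capacity: int) -> list:
    """Place pods (largest first) on the first node with room; open a new node if none fits."""
    nodes = []
    for pod in sorted(pod_cpu_requests, reverse=True):
        if pod > node_capacity:
            raise ValueError(f"pod requesting {pod}m cannot fit on any node")
        for node in nodes:
            if sum(node) + pod <= node_capacity:
                node.append(pod)
                break
        else:
            nodes.append([pod])     # no existing node had room
    return nodes


pods = [500, 1500, 700, 300, 1200, 800, 1000]   # hypothetical CPU requests in millicores
packed = first_fit_decreasing(pods, node_capacity=2000)
print(len(packed), packed)
```

Here seven pods totaling 6,000 millicores fit exactly onto three 2,000-millicore nodes. Spreading the same pods one or two per node would need more nodes for the same work.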

PerfectScale stands out by providing intelligent Kubernetes cost visibility, helping teams maintain balance between performance and spending in real time.

9. Governance & Guardrails

No matter how advanced your cloud cost optimization efforts are, they won’t last without governance. Governance provides the structure and rules that keep your cloud usage compliant and predictable.

Start with budgets and quotas. Each project or team should have specific spending limits to ensure that spending doesn’t get out of hand. Cloud-native tools such as AWS Budgets, Azure Cost Management, or GCP Budgets can provide teams with automated notices as they approach or exceed spending thresholds.
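The logic behind a budget alert is a threshold check, often paired with a simple forecast. The thresholds and the linear projection in this sketch are assumptions for illustration. The native budget tools use their own alerting and forecasting methods.

```python
ALERT_THRESHOLDS = (0.5, 0.8, 1.0)   # assumed alert points as a share of the budget


def crossed_thresholds(spend: float, budget: float) -> list:
    """Return every alert threshold the current spend has reached."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    ratio = spend / budget
    return [t for t in ALERT_THRESHOLDS if ratio >= t]


def forecast_month_end(spend_to_date: float, day_of_month: int, days_in_month: int) -> float:
    """Naive linear projection of month-end spend from the daily run rate so far."""
    return spend_to_date / day_of_month * days_in_month


print(crossed_thresholds(850, 1000), forecast_month_end(600, 10, 30))
```

Alerting on the forecast as well as on actual spend gives a team time to react before the budget is exceeded instead of after.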

Next, implement Policy-as-Code to automate compliance. Combine Infrastructure as Code (IaC) tools like Terraform with policy frameworks like OPA (Open Policy Agent), HashiCorp Sentinel, or Azure Policy to apply consistent cost and governance rules across environments. These policies can prevent untagged resources, block high-cost instance types, or enforce lifecycle policies automatically.
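The shape of such a rule is easy to show in plain code. In practice it would be written in the policy framework's own language, so treat this as a sketch of the logic only. The required tags, the blocked instance type names, and the resource format are all made up for this example.

```python
REQUIRED_TAGS = {"team", "env", "cost-center"}             # assumed tagging standard
BLOCKED_INSTANCE_TYPES = {"gpu.8xlarge", "mem.16xlarge"}   # made-up type names


def violations(resource: dict) -> list:
    """Return human-readable policy violations for one planned resource."""
    found = []
    missing = REQUIRED_TAGS - set(resource.get("tags", {}))
    if missing:
        found.append("missing tags: " + ", ".join(sorted(missing)))
    if resource.get("instance_type") in BLOCKED_INSTANCE_TYPES:
        found.append(f"instance type {resource['instance_type']} is not allowed")
    return found


planned = {"tags": {"team": "search"}, "instance_type": "gpu.8xlarge"}
print(violations(planned))
```

Running a check like this in the deployment pipeline means a non-compliant resource is rejected before it is created, not discovered on next month's bill.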

Governance is not about restricting innovation; it’s about creating a safe framework where teams can build freely without unexpected costs.

10. Cloud Cost Optimization Culture

A strong cloud cost optimization culture means making cost-awareness part of everyday DevOps. With FinOps practices in place, finance and engineering teams collaborate to balance operational performance with spend. When the entire team takes ownership of cloud costs, optimization happens continuously, not as a one-time activity.

Multi-Cloud vs Single Cloud

Many organizations adopt a multi-cloud approach for flexibility, resiliency, and freedom from vendor lock-in. However, multi-cloud is not always the cheaper option. Each provider (AWS, Azure, and GCP) has its own pricing, billing, and discount models, all of which add operational and financial complexity.

When considering cloud cost optimization, the real question is: “Is there enough business value added via a multi-cloud strategy to justify the overall management costs?”

Pros of multi-cloud: better risk diversification, better use of the best service from each vendor, and greater resiliency.

Cons: higher costs for data transfer (egress), lack of a single monitoring solution, and duplicated tooling.

If your workloads depend on specific services from each cloud, multi-cloud may benefit you. If managing spend is your primary focus, a single-cloud strategy (or a primary cloud with limited secondary use) is usually cheaper and easier to optimize at scale.

Best Cloud Cost Optimization Tools

To automate savings, predict usage, and tie costs to business impact, you need specialized platforms built for optimization.

Below are some of the best cloud cost optimization tools in 2026:

1. PerfectScale

PerfectScale stands out by identifying underutilized resources, recommending right-sizing actions, and maintaining performance balance across clusters.

It continuously monitors CPU and memory, providing real-time insights into where capacity can be optimized without risking reliability. What makes PerfectScale unique is its ability to balance cost, stability, and performance automatically, helping teams achieve a healthy Kubernetes environment without constant manual tuning.

PerfectScale integrates smoothly across AWS EKS, Azure AKS, and GCP GKE, making it a cloud-agnostic choice for modern teams running containerized workloads. PerfectScale can help you reduce costs by 50% to 70%.

In a world where Kubernetes efficiency directly impacts cloud bills, PerfectScale is the automation layer every engineering team needs.

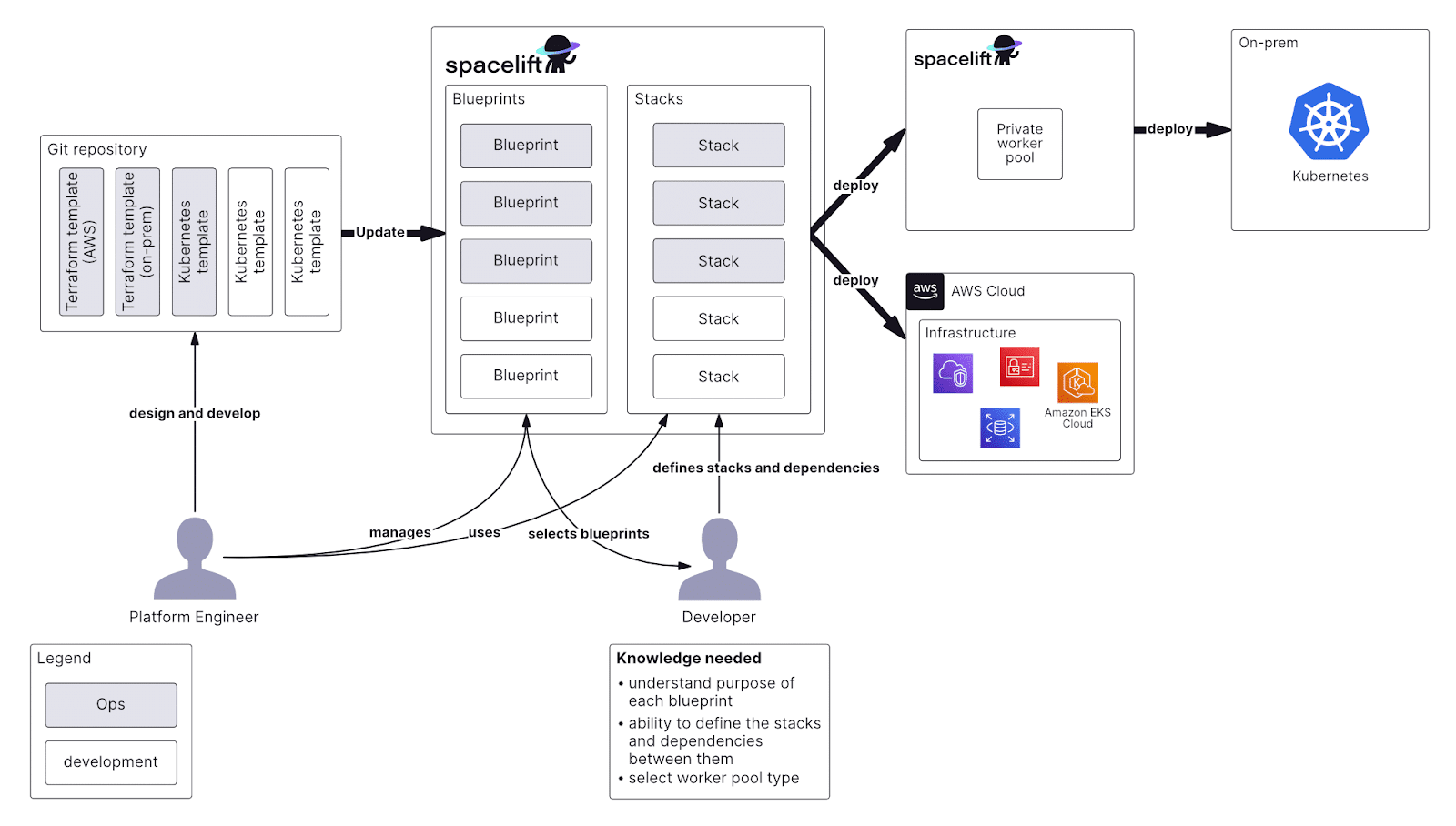

2. Spacelift

Spacelift brings infrastructure automation and policy-driven optimization together. By integrating with Infrastructure-as-Code tools like Terraform and Pulumi, Spacelift helps teams enforce cost-aware policies directly into their deployment pipelines. This makes sure that every resource created already meets cost and compliance standards.

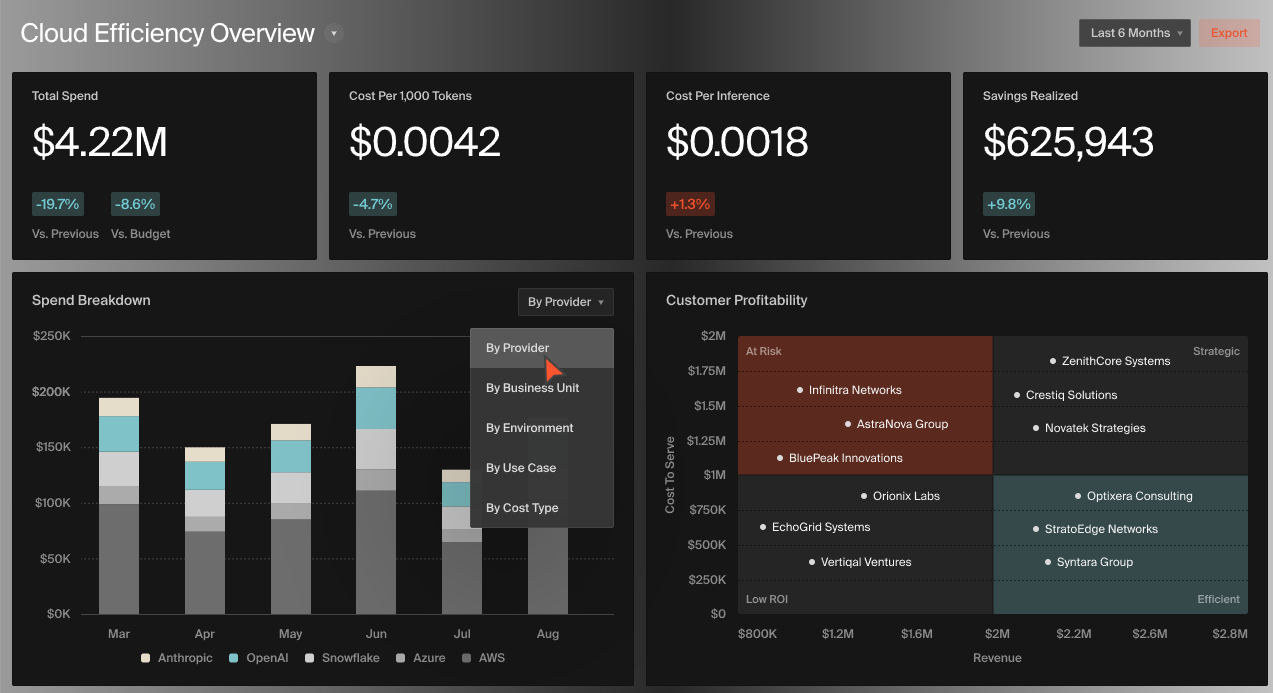

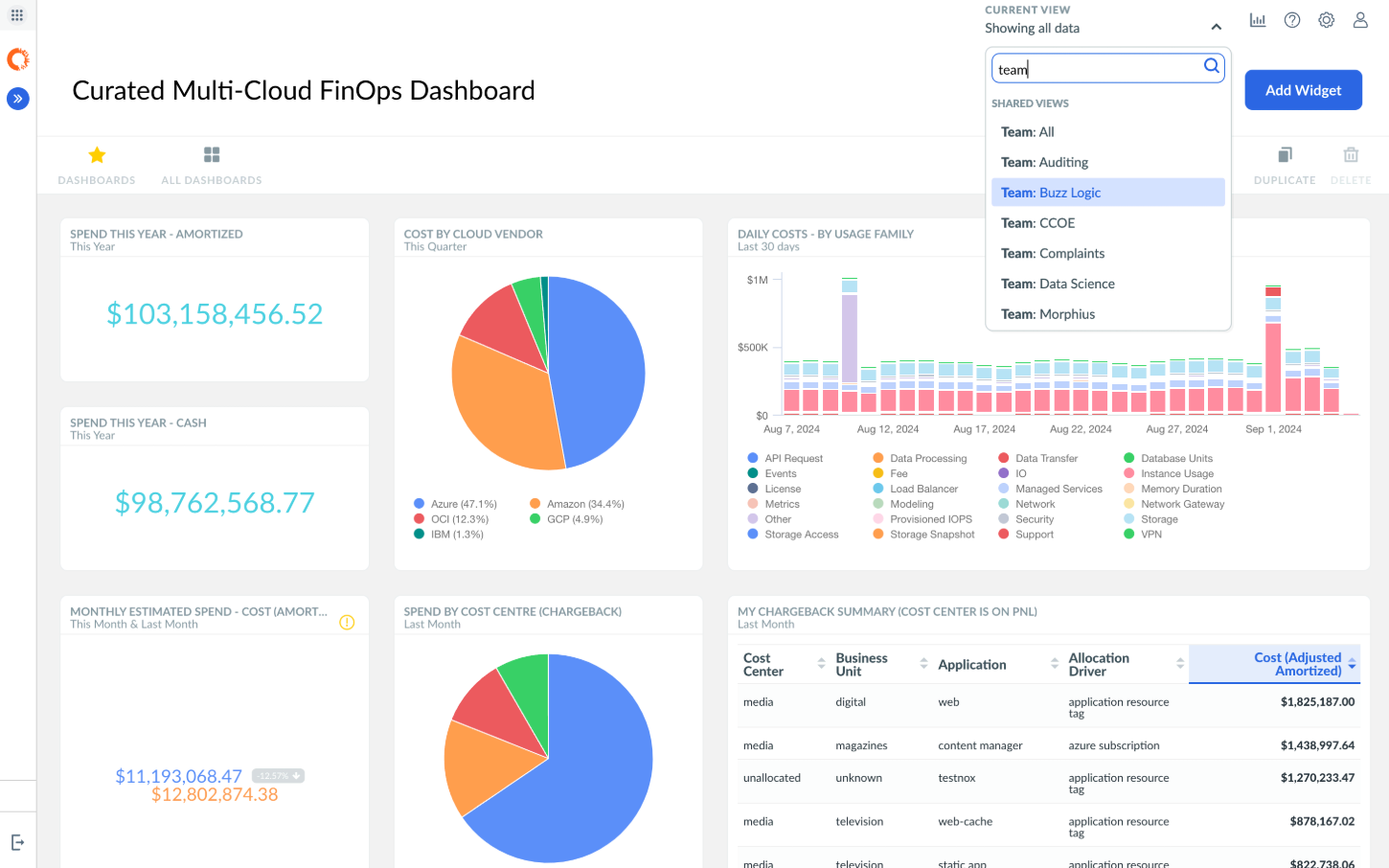

3. CloudZero

CloudZero focuses on connecting cost data with engineering decisions. It helps teams understand why costs are increasing, not just where. By breaking down spending by product, feature, or customer, CloudZero enables cost-aware development and helps SaaS companies calculate unit economics like “cost per transaction” or “cost per user.”

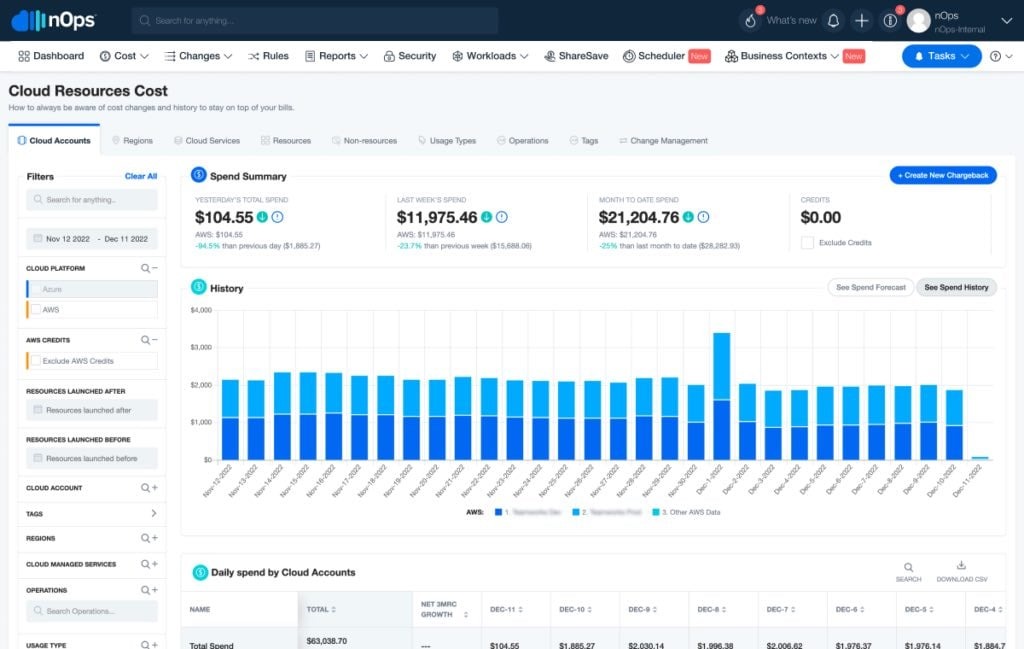

4. nOps

nOps automates cloud cost visibility, governance, and waste detection. It continuously scans environments for idle resources, unattached volumes, and misconfigurations that lead to unnecessary spending.

nOps also integrates tightly with AWS and Kubernetes, helping FinOps teams automatically right-size and tag resources.

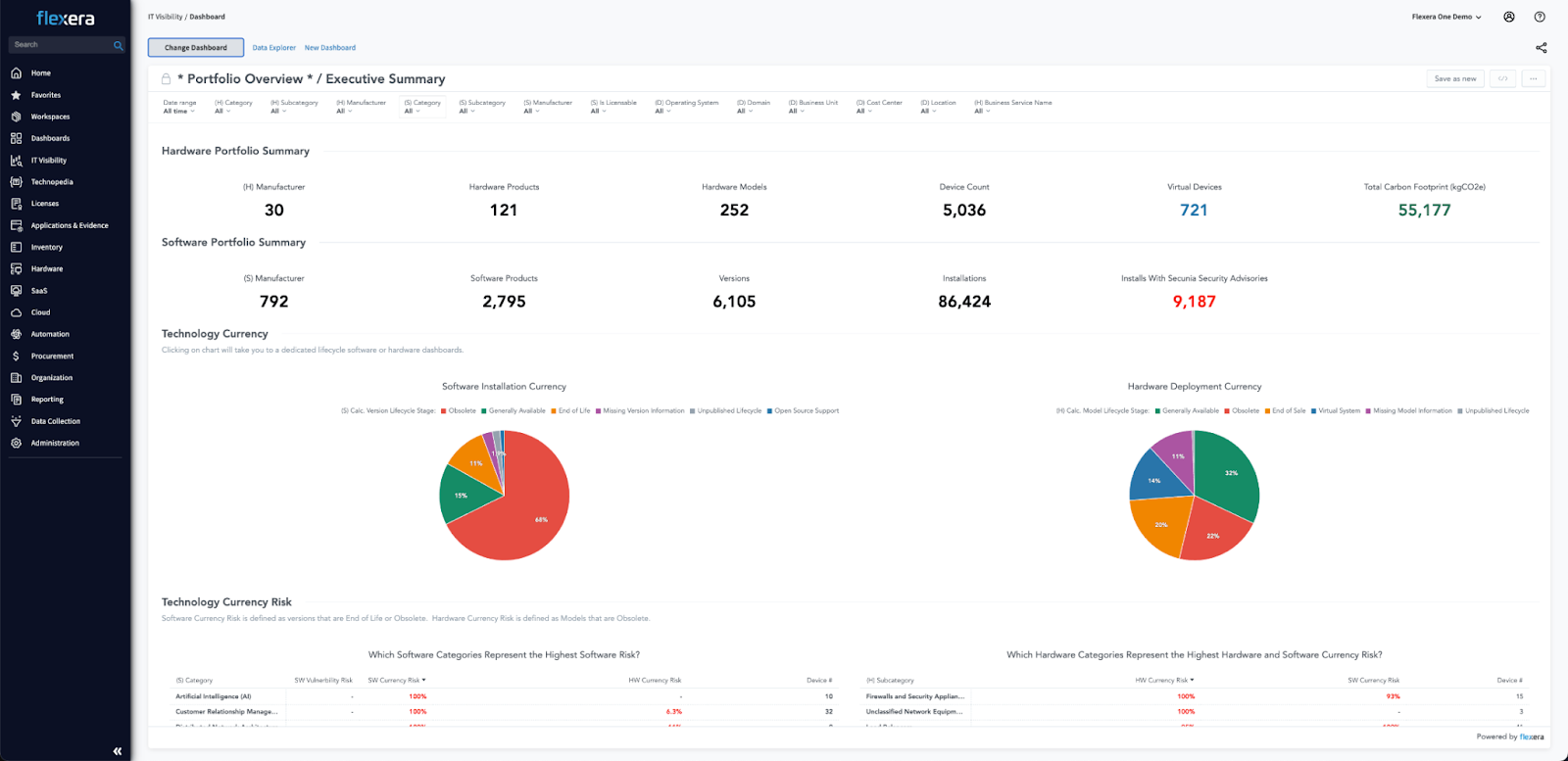

5. Flexera

Flexera offers enterprise-grade cost management, particularly for large multi-cloud environments. It provides unified visibility across AWS, Azure, and GCP accounts, enabling chargeback, showback, and cost forecasting.

Flexera’s strength lies in its governance and financial control features, which suit enterprises managing complex cloud portfolios with multiple teams and budgets.

6. Apptio Cloudability

Apptio Cloudability (now part of IBM) is a cloud cost optimization platform. It enables detailed cost allocation, budgeting, forecasting, and FinOps collaboration.

Apptio helps move from cost tracking to cost accountability by connecting cloud spend with business KPIs. It supports multi-cloud visibility, helping teams identify inefficiencies, track commitments, and forecast future usage patterns accurately.

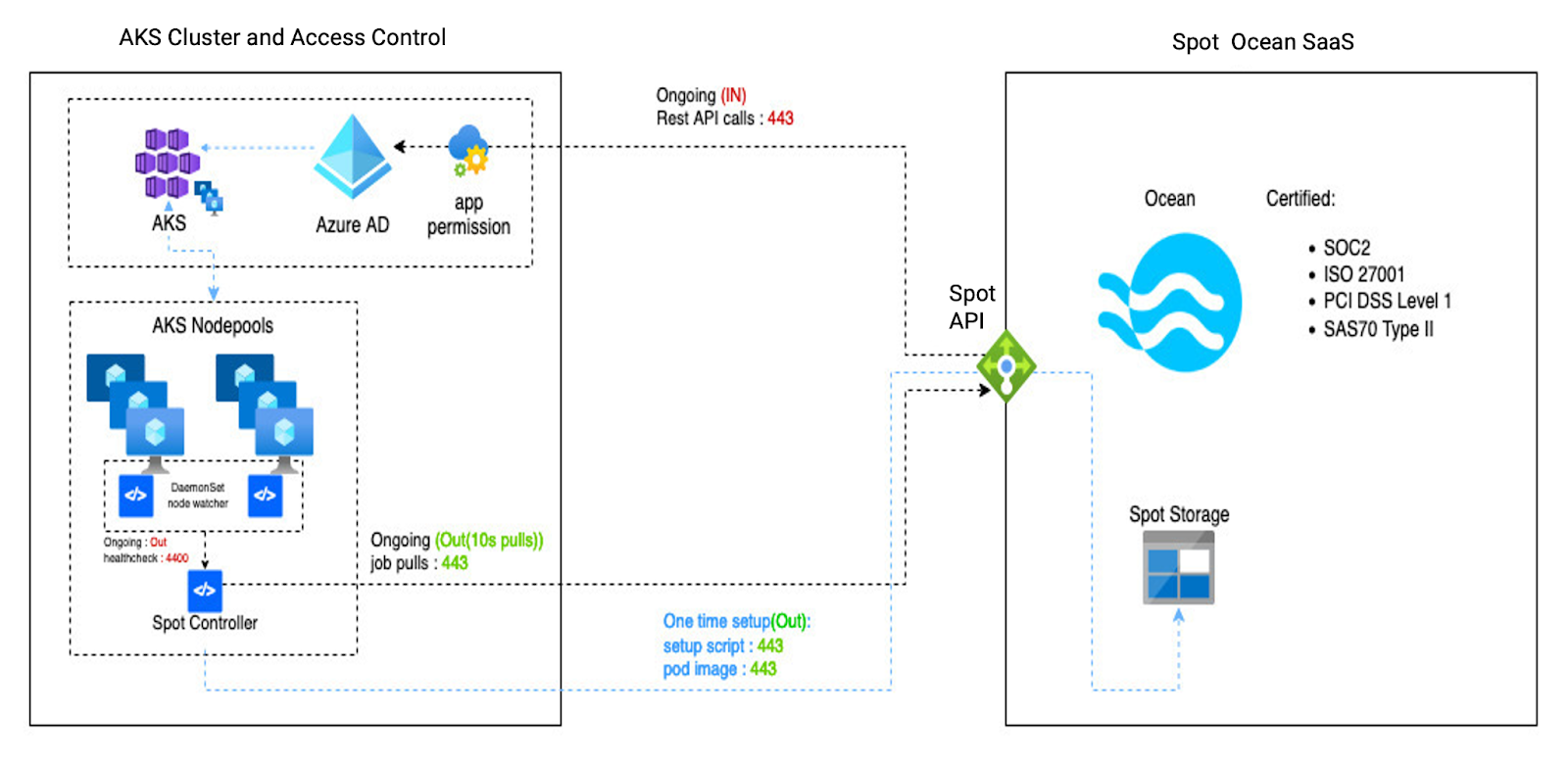

7. Spot.io

Spot.io, now part of Flexera, specializes in using spot instances and automation to optimize compute spend. Its products, like Elastigroup and Ocean for Kubernetes, automatically balance workloads across on-demand and spot capacity.

Spot.io’s continuous optimization engine ensures that workloads always run on the most cost-efficient resources available.

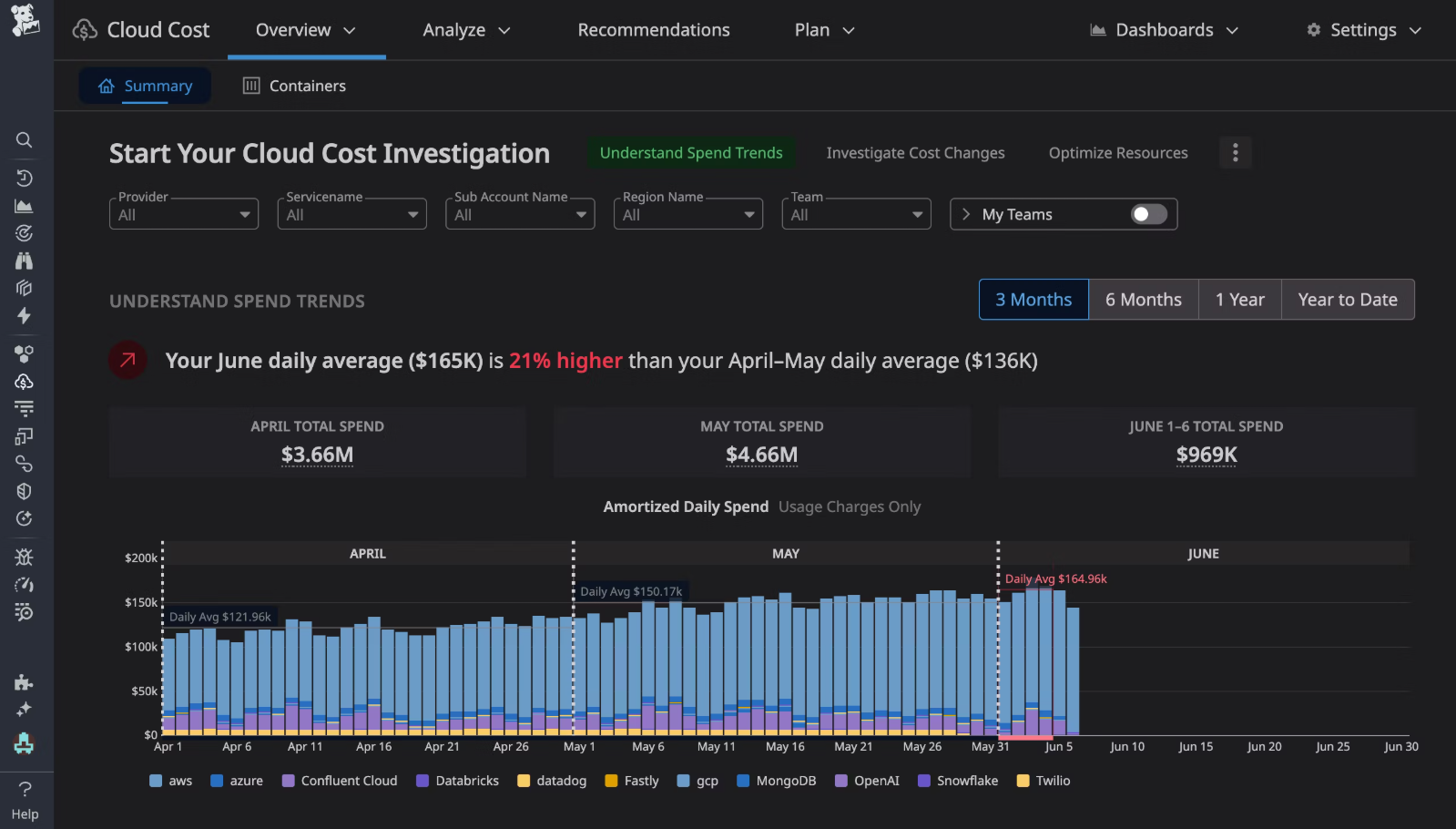

8. Datadog Cloud Cost Management

Datadog brings together performance monitoring and cloud cost optimization in a single platform. By correlating cost data with performance metrics, it helps teams understand if expensive workloads are actually delivering value.

Its AI-driven anomaly detection and predictive analytics also alert teams to unexpected cost spikes, helping teams act before budgets are exceeded.

9. New Relic

New Relic combines observability with cost awareness. Its cloud cost management features help teams track resource usage across environments and correlate cost with application performance. This makes it easier to justify optimization decisions with concrete performance data.

New Relic also provides integrations with AWS, Azure, and GCP, which makes it a good choice for multi-cloud visibility and efficiency.

To summarize:

Cloud cost optimization is a continuous journey, not a one-time task. The goal is to develop habits: viewing costs, taking early action, and continuously improving.

When done correctly, it drives efficiency, promotes sustainability, and creates room in your budget for innovation. All the little things (automating scaling, cleaning up waste, reviewing spending) start to add up in the long run.

So, start small. Automate where possible. Review costs regularly. Over time, these simple actions will prove invaluable in developing an organizational culture of smart cloud expenditure across AWS, Azure, and GCP.