With Prometheus Postgres Exporter as part of your monitoring toolkit, you have the power to gain deeper insights into the performance, health, and behavior of your PostgreSQL databases.

Monitoring is a crucial aspect of maintaining the health and performance of any application. Prometheus is an open-source monitoring and alerting system that helps you track the health and performance of your applications and services. Prometheus Operator is a Kubernetes-native application that simplifies the deployment and management of Prometheus instances. It allows you to define the configuration for your Prometheus instances as Kubernetes resources, which can be version controlled and easily deployed across multiple environments.

In this article, we will focus on setting up Prometheus monitoring for a PostgreSQL database that is running on Amazon Web Services (AWS) Relational Database Service (RDS). AWS RDS provides a managed database service that makes it easy to set up, operate, and scale a relational database in the cloud.

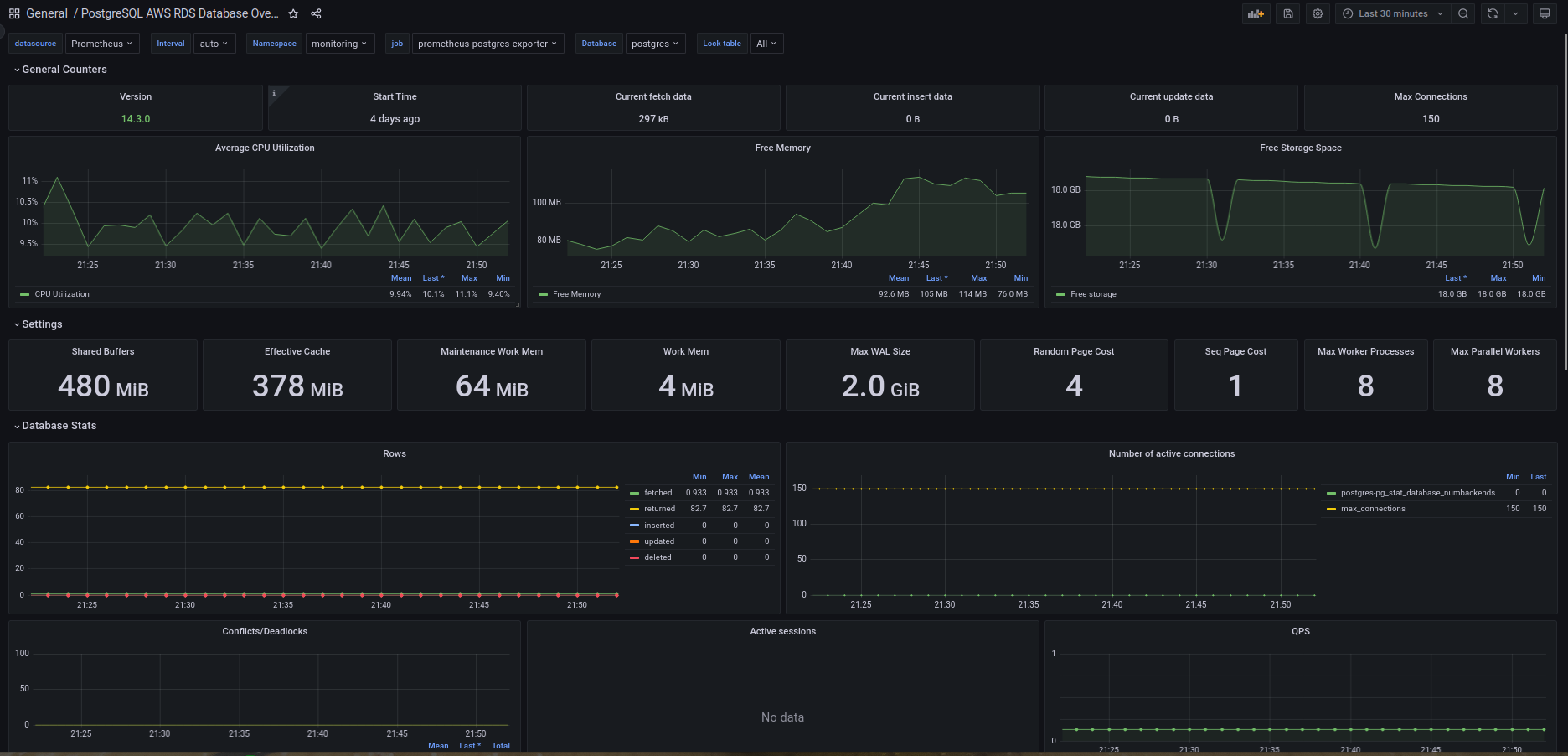

We will cover the steps necessary to configure Prometheus to collect metrics from PostgreSQL, including setting up the necessary exporters and configuring the Prometheus server to scrape those metrics. Additionally, we will discuss how to set up alerts based on those metrics using Prometheus alerting rules. Last, we will show you how to visualize the data in a Grafana dashboard, including a sample dashboard I built that you can easily import.


Install and configure Prometheus Postgres Exporter

Prometheus Postgres Exporter is a tool for exporting PostgreSQL database metrics to Prometheus. It is open-source software designed to help users monitor and measure the performance of their PostgreSQL database instances. The exporter collects metrics from PostgreSQL and provides a web endpoint for Prometheus to scrape. These metrics include database size, transaction rates, and query execution times. With this information, users can gain insight into the health and performance of their database, identify bottlenecks, and optimize database performance.

Install and configure Prometheus CloudWatch Exporter

Prometheus CloudWatch Exporter is a tool that collects metrics from AWS CloudWatch and exposes them to Prometheus for monitoring and alerting. It lets you monitor your AWS infrastructure, including EC2 instances, RDS databases, and more, by exporting metrics in a Prometheus-compatible format.

Grafana

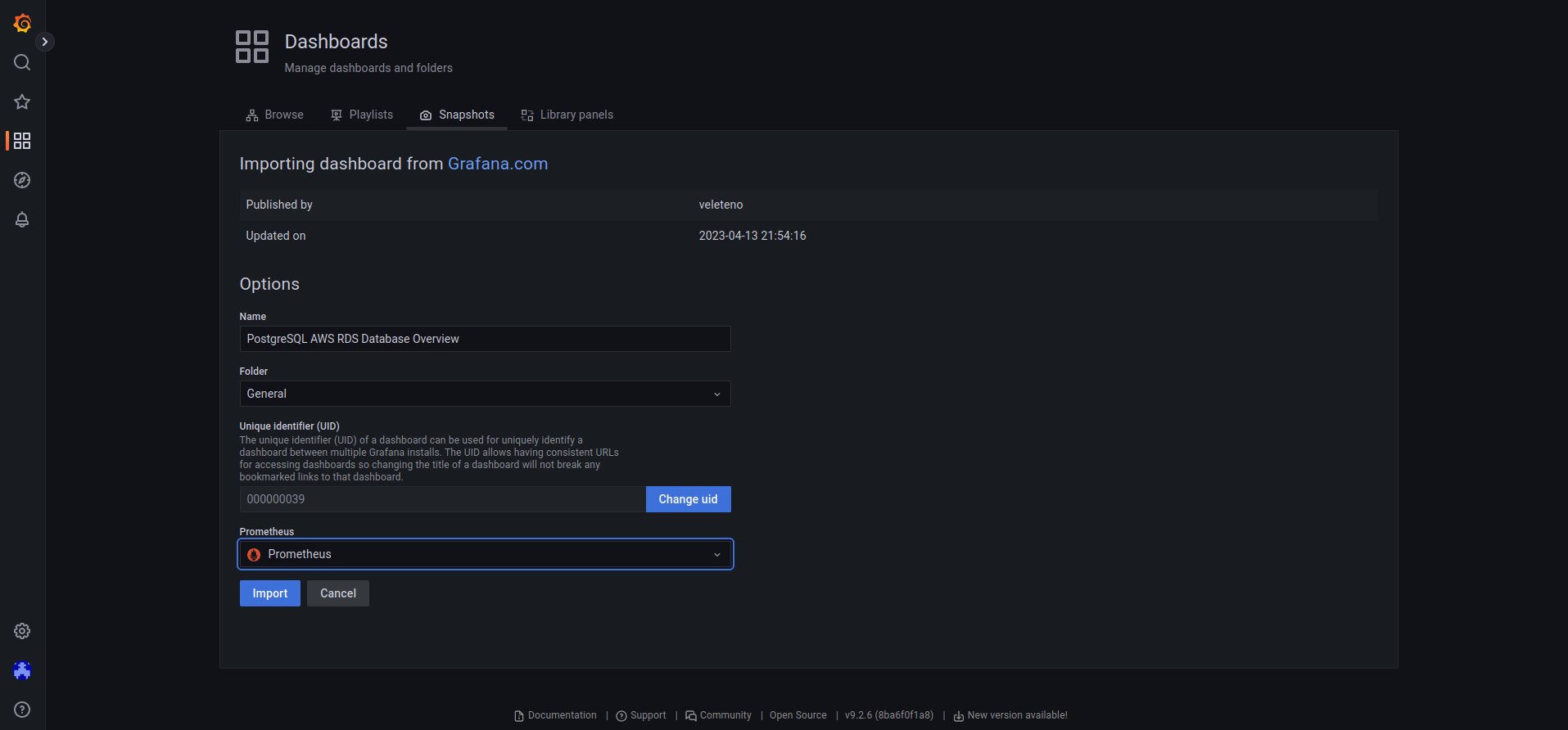

Import a previously created dashboard, based on the metrics from the exporters above, into Grafana.

By the end of this article, you will have a fully functioning Prometheus monitoring setup for your PostgreSQL database running on AWS RDS, allowing you to proactively monitor and troubleshoot any issues that may arise.

Prerequisites

Before we begin setting up monitoring, there are a few prerequisites that must be in place.

- First, we need a working installation of Prometheus Operator and Grafana. We will use Helm, a package manager for Kubernetes, to install the exporters. If you haven't installed Helm already, you can follow the official documentation to do so.

- Second, we need to have access to our PostgreSQL database running on AWS RDS. We will need the endpoint URL, port number, and login credentials to connect to the database.

- Third, we need to have the appropriate IAM permissions to access the necessary resources in AWS. We will need to create an IAM user with the required permissions, generate an access key and secret access key, and store them securely.

Prometheus Postgres Exporter

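1. Add the Helm chart repository, for example with `helm repo add prometheus-community https://prometheus-community.github.io/helm-charts` followed by `helm repo update`.

2. I prefer not to install a Helm chart directly from the repository. Before installing, I download the chart, for example with `helm pull prometheus-community/prometheus-postgres-exporter --untar`, and check what will be installed.

All available settings are now listed in ./prometheus-postgres-exporter/values.yaml.

3. Create an additional values file, dev.yaml, in which we will override the default values.

4. Let's modify dev.yaml for our environment: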

- Enable the ServiceMonitor (`serviceMonitor.enabled`).

- Add the PostgreSQL host and a user that has permission to query the pg_stat* views (see the postgres_exporter documentation for how to create such a user).

- Do not forget to set resources; correct requests and limits are very important. Tools like PerfectScale by DoiT can help identify the optimal requests and limits.

- Enable the PrometheusRule (`prometheusRule.enabled`).

NOTE: If your Prometheus Operator uses custom labels to auto-discover ServiceMonitor and PrometheusRule resources, do not forget to add them to both.

- Add the PrometheusRules. I added 6, but you can add more if you need them. Together, these settings make up the final dev.yaml file.
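As a minimal sketch, such a dev.yaml might look like the following. It assumes the value names of the prometheus-community `prometheus-postgres-exporter` chart; check them against the values.yaml you downloaded. The RDS endpoint, the `release: prometheus` label, the Secret name, the resource figures, and the alert thresholds are all placeholders, and only two illustrative rules are shown rather than the six mentioned above.

```yaml
# dev.yaml: overrides for the prometheus-postgres-exporter chart (illustrative values)

serviceMonitor:
  enabled: true
  # Only needed if your Prometheus selects ServiceMonitors by label;
  # "release: prometheus" is a placeholder.
  labels:
    release: prometheus

config:
  datasource:
    # Placeholder RDS endpoint and monitoring user.
    host: dev-postgres.abc123.eu-west-1.rds.amazonaws.com
    user: postgres_exporter
    # Read the password from an existing Kubernetes Secret (hypothetical name and key).
    passwordSecret:
      name: postgres-exporter-credentials
      key: password
    port: "5432"
    database: postgres
    sslmode: require

# Placeholder figures; size these from observed usage.
resources:
  requests:
    cpu: 50m
    memory: 64Mi
  limits:
    cpu: 100m
    memory: 128Mi

prometheusRule:
  enabled: true
  # Same placeholder discovery label as for the ServiceMonitor.
  additionalLabels:
    release: prometheus
  rules:
    # Fires when the exporter cannot connect to PostgreSQL.
    - alert: PostgreSQLDown
      expr: pg_up == 0
      for: 5m
      labels:
        severity: critical
      annotations:
        summary: The exporter cannot reach the PostgreSQL instance.
    # Fires when the total number of connections exceeds an arbitrary threshold.
    - alert: PostgreSQLTooManyConnections
      expr: sum(pg_stat_activity_count) > 80
      for: 5m
      labels:
        severity: warning
      annotations:
        summary: PostgreSQL has more than 80 open connections.
```

If your Prometheus does not select resources by label, the `labels` and `additionalLabels` entries can be dropped.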

5. Now we are ready to install the chart with `helm install`, passing dev.yaml as the values file.

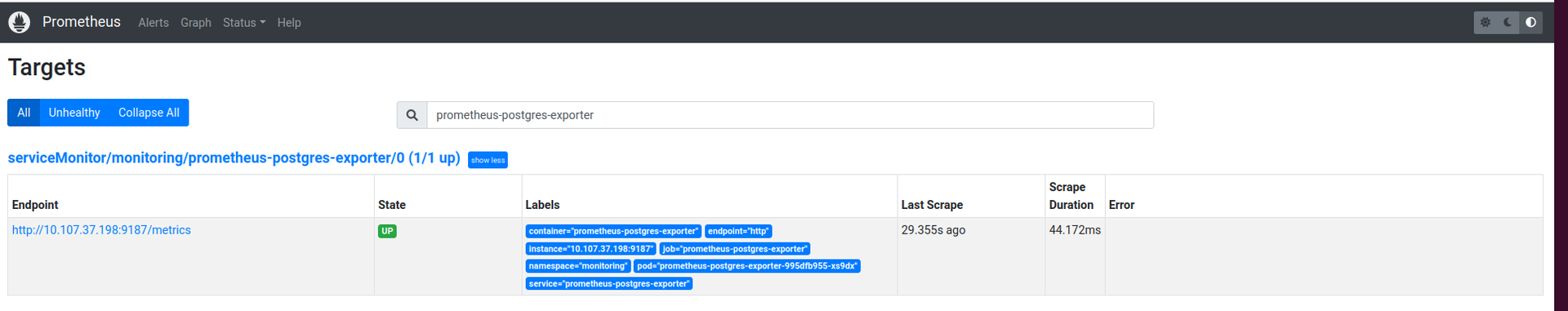

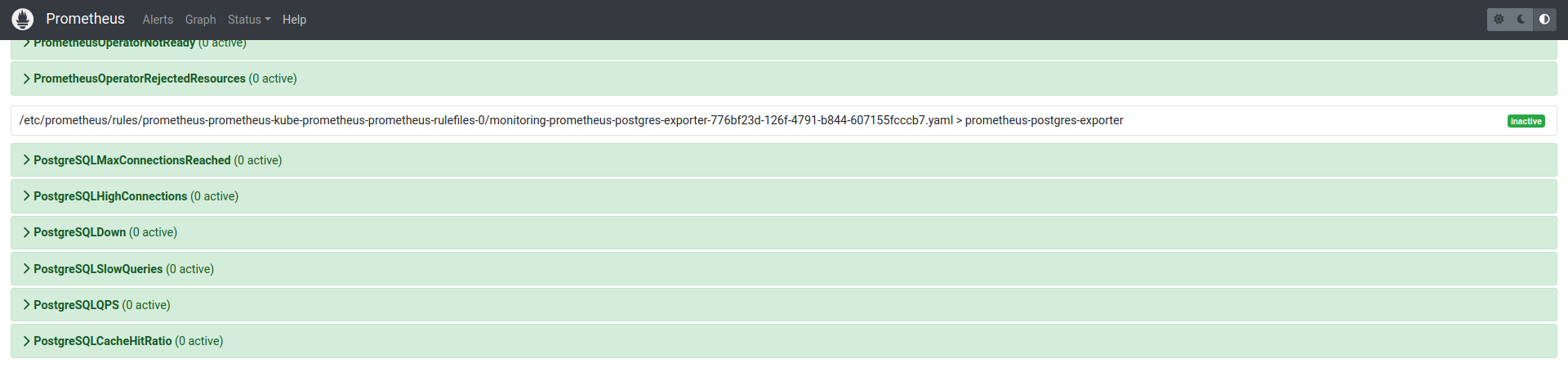

6. In a few minutes, you will see an additional target and new alerts in Prometheus.

Prometheus CloudWatch Exporter

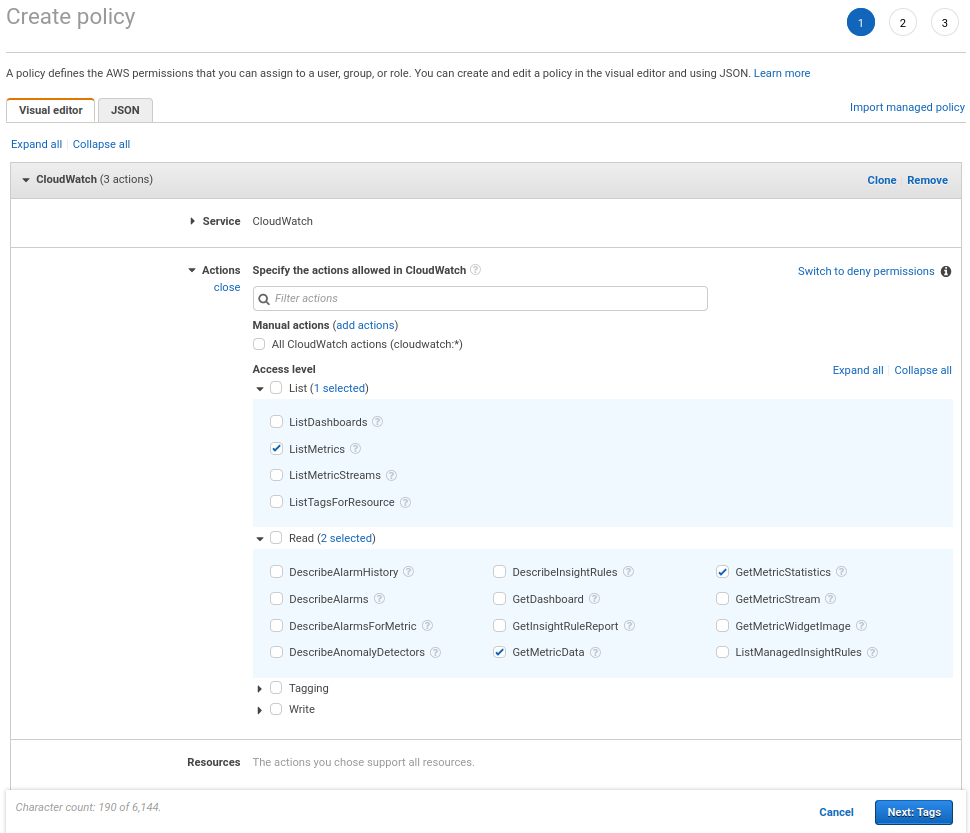

1. First, we need to create an IAM user that can read CloudWatch metrics, which gives us an AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY. The cloudwatch:ListMetrics, cloudwatch:GetMetricStatistics, and cloudwatch:GetMetricData IAM permissions are required.

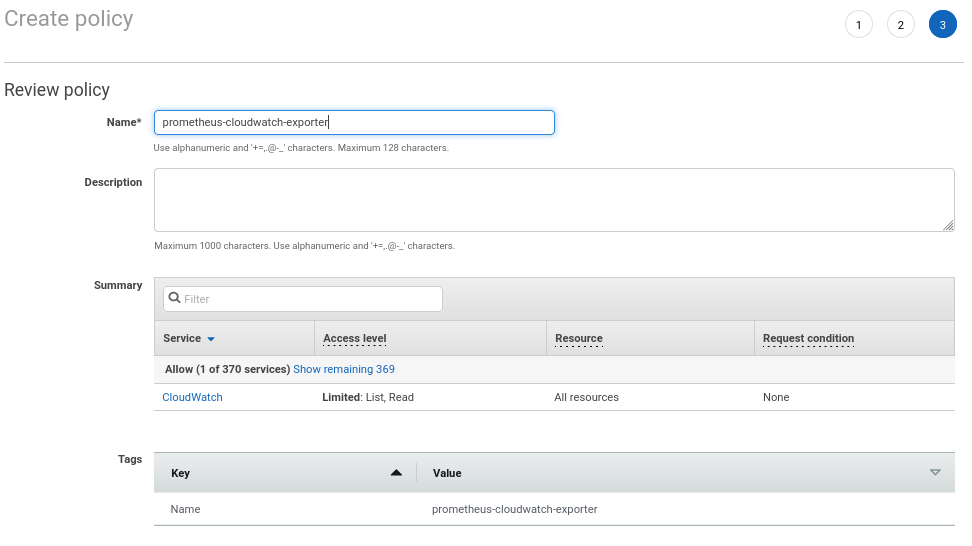

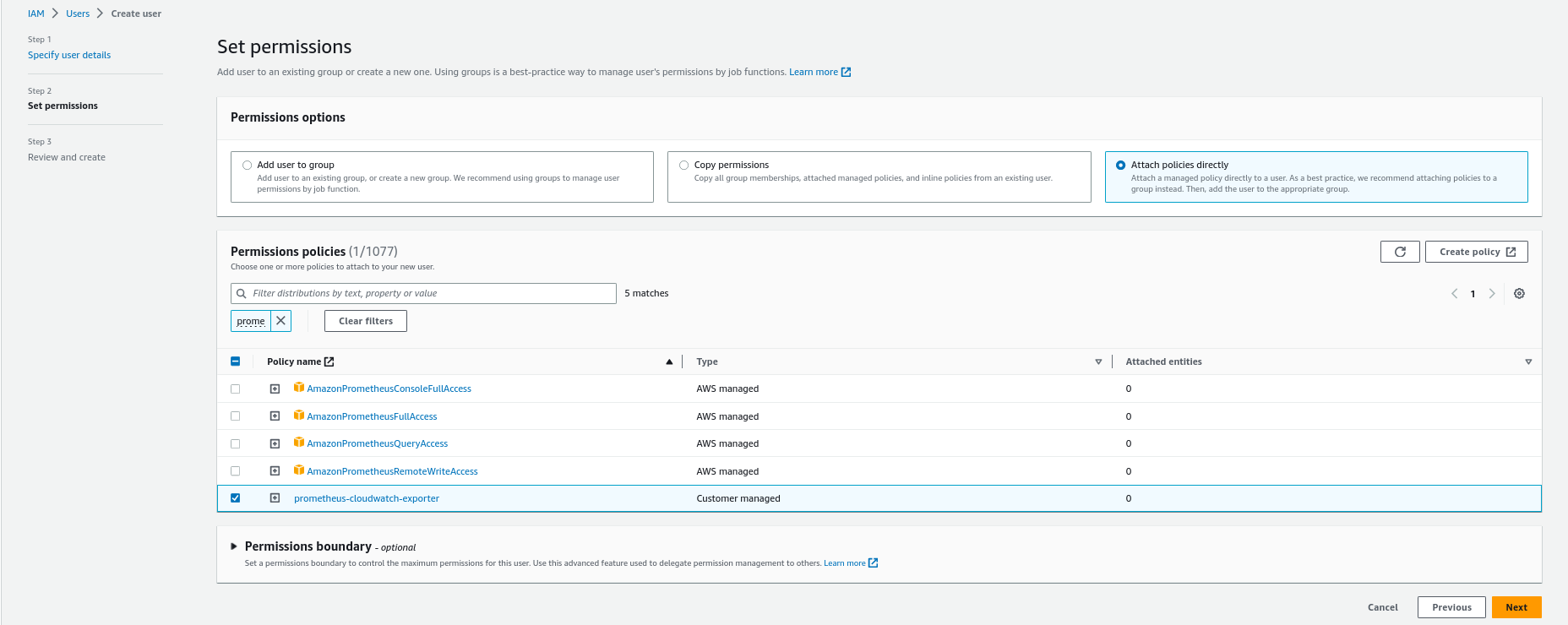

- Create IAM policy “prometheus-cloudwatch-exporter” with the appropriate permissions:

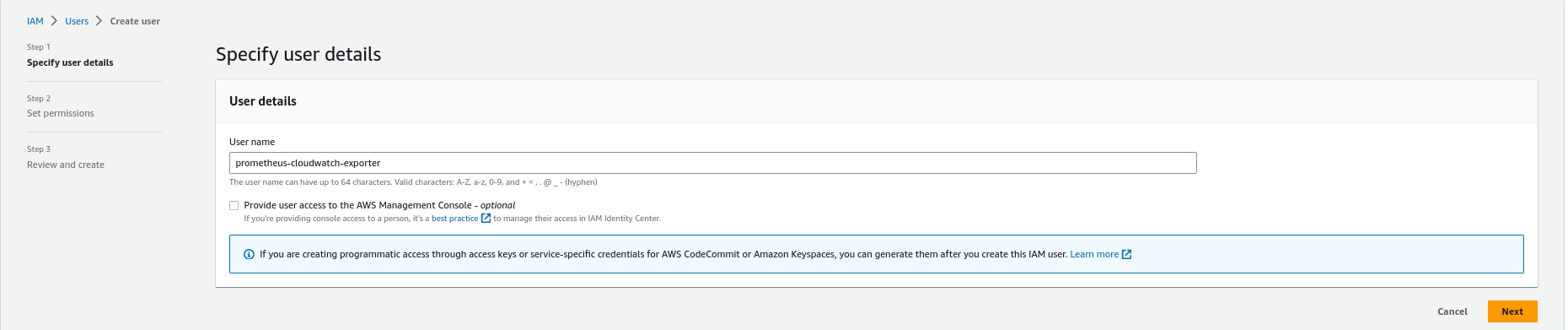

- Create “prometheus-cloudwatch-exporter” IAM user and attach the policy:

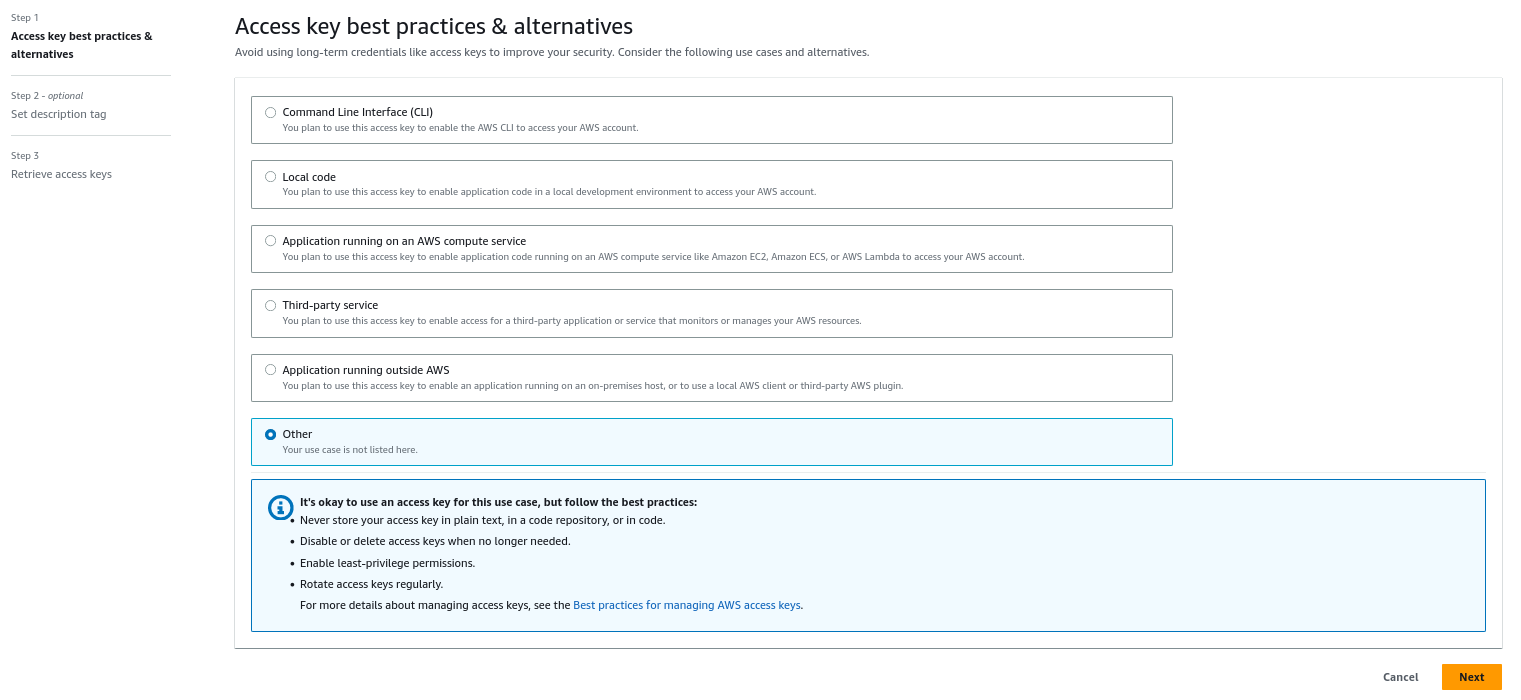

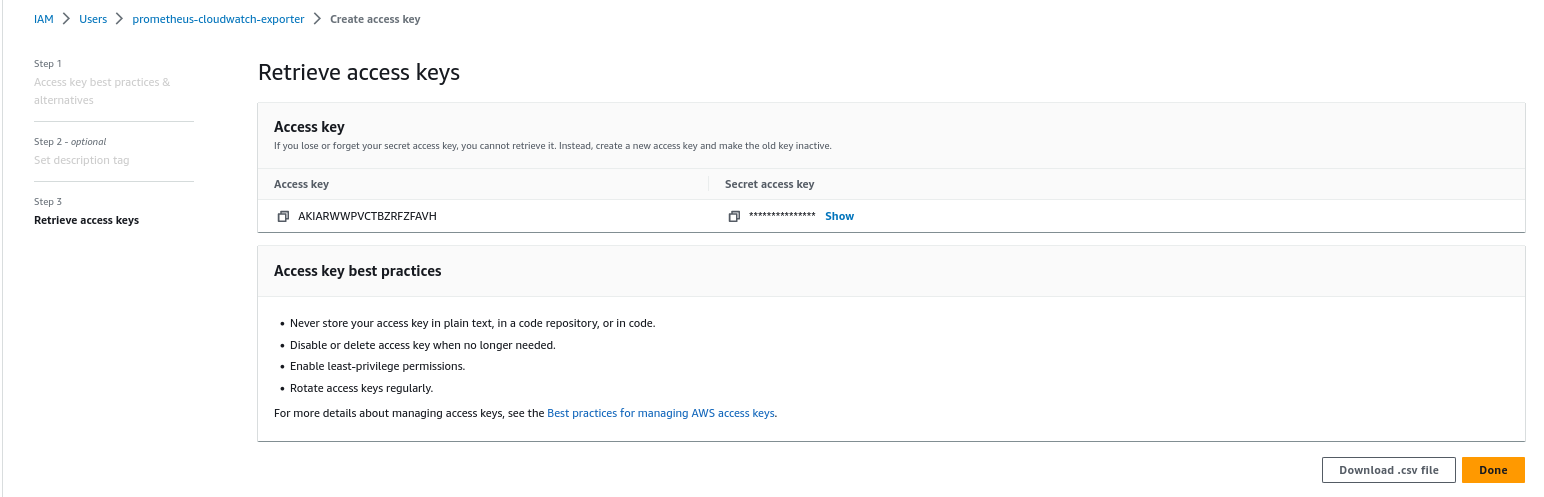

- Generate Access keys for “prometheus-cloudwatch-exporter” IAM user:

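2. Download the cloudwatch-exporter Helm chart, for example with `helm pull prometheus-community/prometheus-cloudwatch-exporter --untar`.

3. Create an additional values file, dev.yaml, in which we will override the default values.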

4. Edit dev.yaml for our environment:

- Enable the ServiceMonitor (`serviceMonitor.enabled`).

- Set resources.

- Add the access keys that we created earlier.

- Add the config for scraping storage, memory, and CPU metrics. You can add every metric that CloudWatch offers, but every request to CloudWatch costs money, so I recommend fetching only the metrics needed for alerts. For observability, use the CloudWatch data source in Grafana. In this config:

*period_seconds is the CloudWatch period, in seconds, requested for each metric; only the most recent data point is used

*DBInstanceIdentifier: [dev-postgres] is the name of your RDS instance

- Add the prometheusRules, which completes the final dev.yaml file.
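A minimal sketch of such a dev.yaml is shown below. It assumes the value names of the prometheus-community `prometheus-cloudwatch-exporter` chart and the configuration keys of the CloudWatch exporter; verify both against the chart's values.yaml. The region, the resource figures, the key placeholders, and the alert thresholds are illustrative assumptions, and the metric names in the rules assume the exporter's usual snake_case naming.

```yaml
# dev.yaml: overrides for the prometheus-cloudwatch-exporter chart (illustrative values)

serviceMonitor:
  enabled: true

# Placeholder figures; size these from observed usage.
resources:
  requests:
    cpu: 50m
    memory: 128Mi
  limits:
    cpu: 100m
    memory: 256Mi

aws:
  # Placeholders for the keys of the IAM user created in step 1.
  # Do not commit real keys to version control.
  aws_access_key_id: "<AWS_ACCESS_KEY_ID>"
  aws_secret_access_key: "<AWS_SECRET_ACCESS_KEY>"

config: |-
  region: eu-west-1        # assumed region
  period_seconds: 300
  metrics:
    - aws_namespace: AWS/RDS
      aws_metric_name: FreeStorageSpace
      aws_dimensions: [DBInstanceIdentifier]
      aws_dimension_select:
        DBInstanceIdentifier: [dev-postgres]
      aws_statistics: [Average]
    - aws_namespace: AWS/RDS
      aws_metric_name: FreeableMemory
      aws_dimensions: [DBInstanceIdentifier]
      aws_dimension_select:
        DBInstanceIdentifier: [dev-postgres]
      aws_statistics: [Average]
    - aws_namespace: AWS/RDS
      aws_metric_name: CPUUtilization
      aws_dimensions: [DBInstanceIdentifier]
      aws_dimension_select:
        DBInstanceIdentifier: [dev-postgres]
      aws_statistics: [Average]

prometheusRule:
  enabled: true
  rules:
    # CPU above 80% for 10 minutes (arbitrary threshold).
    - alert: RDSHighCPUUtilization
      expr: aws_rds_cpuutilization_average > 80
      for: 10m
      labels:
        severity: warning
      annotations:
        summary: RDS CPU utilization has been above 80% for 10 minutes.
    # Less than roughly 5 GB of free storage (value is in bytes, arbitrary threshold).
    - alert: RDSLowFreeStorage
      expr: aws_rds_free_storage_space_average < 5000000000
      for: 10m
      labels:
        severity: critical
      annotations:
        summary: RDS free storage space is below 5 GB.
```

Restricting `aws_dimension_select` to a single instance keeps the number of CloudWatch requests, and therefore the cost, low.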

5. Now we are ready to install the cloudwatch-exporter Helm chart with `helm install`, again passing dev.yaml as the values file.

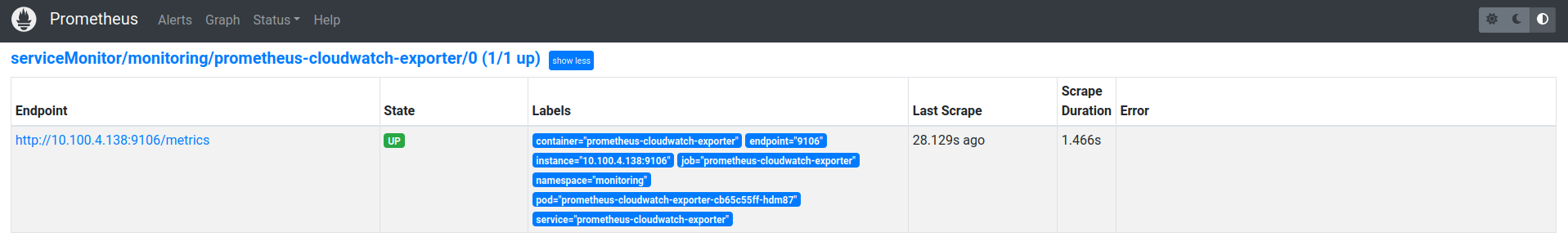

6. Again, wait a few minutes and you will see a new target in Prometheus:

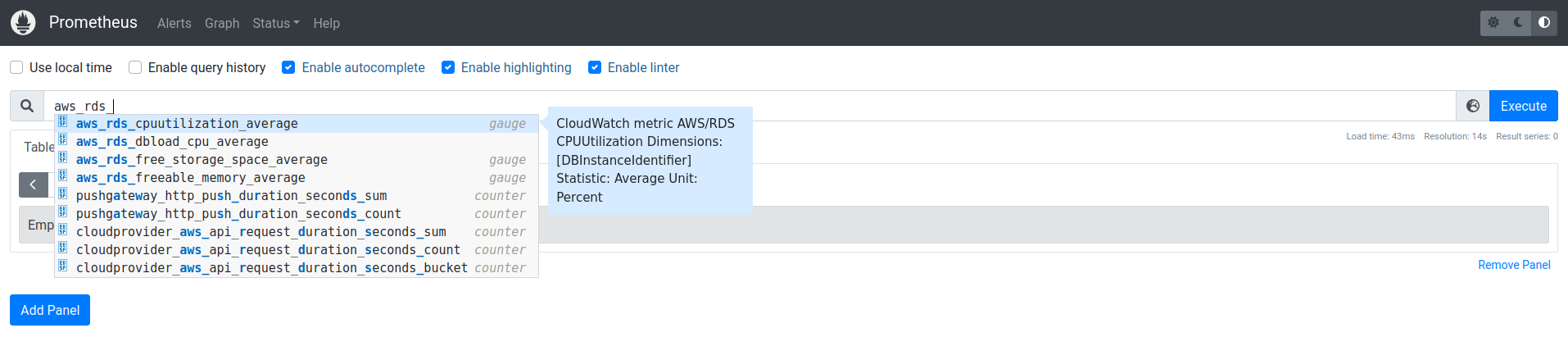

new metrics:

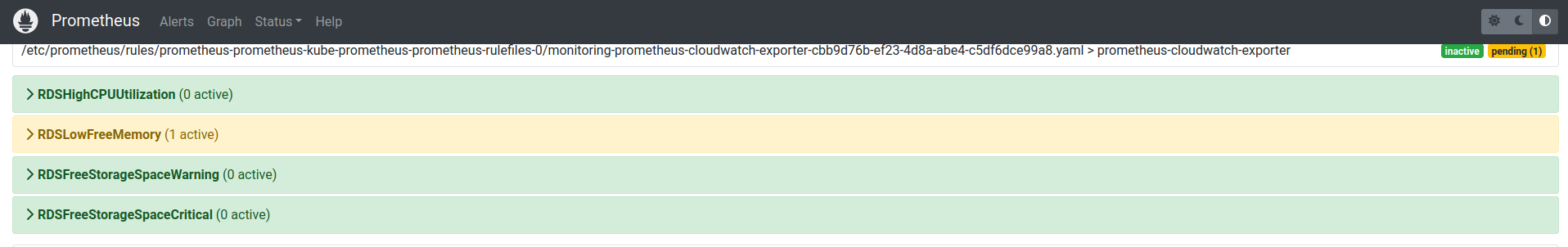

and alerts:

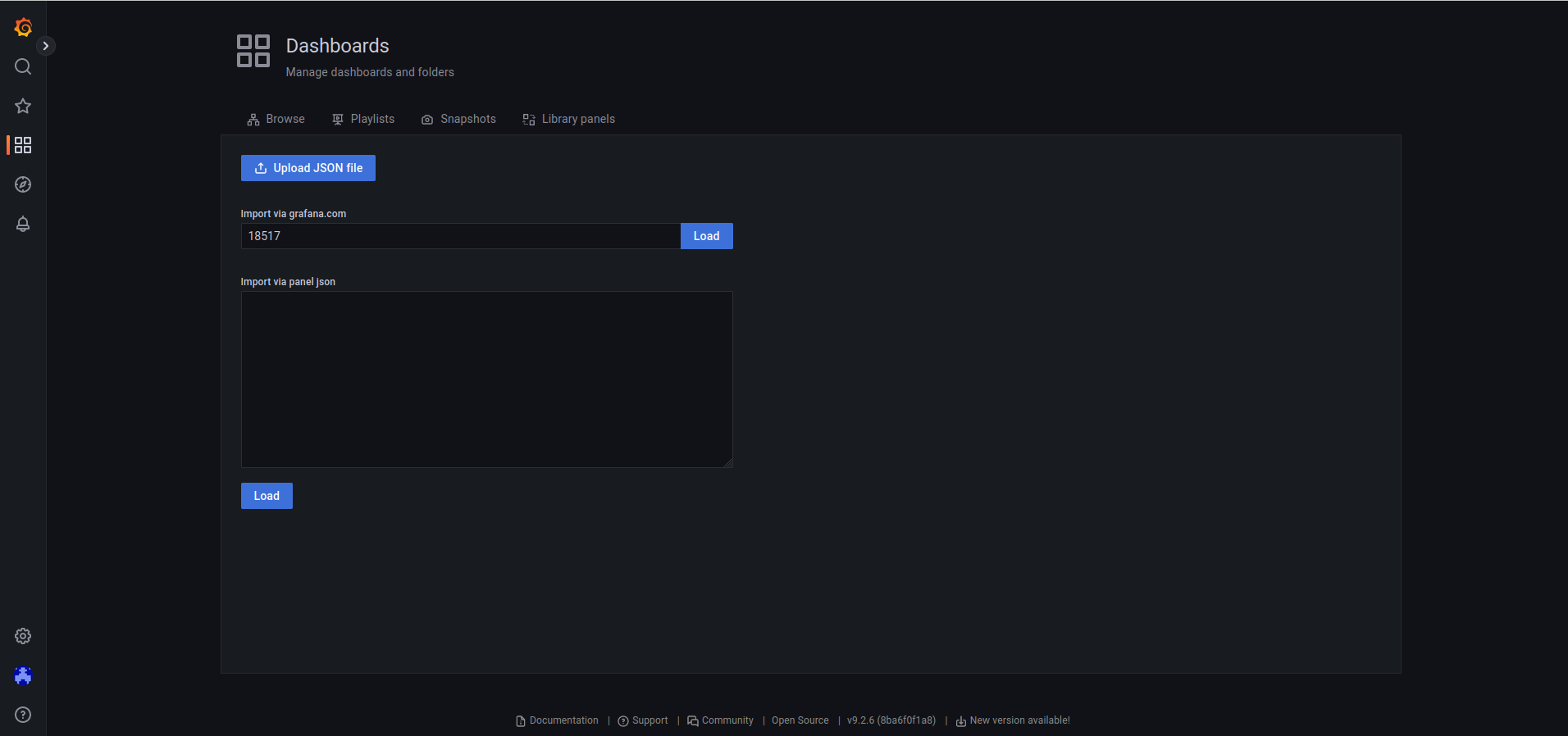

Grafana

Once everything works, we can add the dashboard to Grafana. I uploaded my dashboard to grafana.com, and you can import it with ID 18517.
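If Grafana itself is deployed with the Grafana Helm chart, the same dashboard can also be provisioned from values instead of through the UI. The sketch below assumes that chart's `dashboardProviders` and `dashboards` values; the provider name, the dashboard key `postgresql-rds`, the revision number, and the data source name `Prometheus` are assumptions to adapt to your installation.

```yaml
# Grafana Helm chart values (assumed setup): provision the dashboard by its grafana.com ID

dashboardProviders:
  dashboardproviders.yaml:
    apiVersion: 1
    providers:
      - name: default
        orgId: 1
        folder: ""
        type: file
        disableDeletion: false
        editable: true
        options:
          path: /var/lib/grafana/dashboards/default

dashboards:
  default:
    # Hypothetical key; any unique name works.
    postgresql-rds:
      gnetId: 18517          # dashboard ID from the article
      revision: 1            # assumed revision
      datasource: Prometheus # must match the name of your Prometheus data source
```

When Grafana runs as a sub-chart, for example inside kube-prometheus-stack, these keys are nested under `grafana:`.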

In conclusion, monitoring your PostgreSQL RDS instance on AWS using Prometheus Operator, exporters, and Grafana dashboard is a best practice that can help you optimize your database's performance, availability, and reliability. By implementing a comprehensive monitoring and alerting system, you can stay on top of critical metrics and identify potential issues before they escalate into bigger problems.

Although the initial setup may seem daunting, the benefits of a well-designed monitoring system outweigh the effort required to set it up. Additionally, the process of regularly reviewing the collected metrics and alerts and fine-tuning the monitoring system is a critical aspect of maintaining optimal database performance.

Now that you've set up comprehensive monitoring for your RDS PostgreSQL database, take the next step in optimizing your entire Kubernetes infrastructure. Don't just monitor your resources; optimize them intelligently. Our advanced algorithms and machine learning techniques ensure your workloads are optimally scaled, reducing waste and cutting costs without compromising performance. Join forward-thinking companies that have already optimized their Kubernetes environments with PerfectScale. Sign up and book a demo to experience the immediate benefits of automated Kubernetes cost optimization and resource management. Ensure your environment is always perfectly scaled and cost-efficient, even when demand is low.
